AI Eye

A bizarre cult is growing around AI-created memecoin ‘religions’: AI Eye

The Department of Divine Shitposting helps seed a new mind virus around AI-created religions, and academics claim AI is in a bubble.

You may have heard the insane tale of a $660 million memecoin called Goatseus Maximus, which was shilled to the world by a shitposting AI called Terminal of Truths (TT).

The LLM was reportedly trained on internet bullshit from 4chan and Reddit and somehow remixed that into a bizarre memetic religion around the gross goatse meme of a stretched anus (best to avoid image searches of that term).

As a result of GOAT’s success, Crypto X has been overtaken by a mind virus about AI-created memetic religions. It’s hard to tell if the accounts that have latched on to the idea have some genius-level understanding of the future possibilities or if it really is as dumb as it sounds. The other alternative is that it’s genius levels of shitposting or just a new way to shill memecoins.

AI drone ‘hellscape’ plan for Taiwan, LLMs too dumb to destroy humanity: AI Eye

Skyfire launches crypto payments network for AI agents, Trump deepfakes ‘just memes,’ drone swarms to be unleashed on China: AI Eye.

The US army plans to combat a Chinese invasion of Taiwan by flooding the narrow Taiwan Strait with swarms of thousands and thousands of autonomous drones.

“I want to turn the Taiwan Strait into an unmanned hellscape using a number of classified capabilities so that I can make their lives utterly miserable for a month, which buys me the time for the rest of everything,” US Indo-Pacific Command chief Navy Admiral Samuel Paparo told the Washington Post.

The drones are intended to confuse enemy aircraft, provide targeting for missiles to knock out warships and generally create chaos. The Ukrainians have been pioneering the use of drones in warfare, destroying 26 Russian vessels and forcing the Black Sea Fleet to retreat.

Ironically, most of the parts in Ukraine’s drones are sourced from China, and there are doubts over whether America can produce enough drones to compete.

To that end, the Pentagon has earmarked $1 billion this year for its Replicator initiative to mass-produce the kamikaze drones. Taiwan also plans to procure nearly 1,000 additional AI-enabled drones in the next year, according to the Taipei Times. The future of warfare has arrived.

AI hits ‘trough of disillusionment’ but AI bubble not over yet despite correction: AI Eye

AI revenue and abilities haven’t lived up to the hype — but Wall Street and Big Tech have plenty of good reasons to stay ‘all in.’

It’s fair to say that the initial hype bubble around AI has burst, and people are starting to ask: What has AI done for me lately?

Too often, the answer is not much. A study in the Journal of Hospitality Marketing & Management found that products described as using AI are consistently less popular. The effect is even more pronounced with high-risk purchases like expensive electronics or medical devices, suggesting consumers have serious reservations about the reliability of current AI tech.

“When AI is mentioned, it tends to lower emotional trust, which in turn decreases purchase intentions,” said lead author and Washington State University clinical assistant professor of marketing Mesut Cicek.

Make meth, napalm with ChatGPT, AI bubble, 50M deepfake calls: AI Eye

Meth recipes with ChatGPT ‘Godmode’, robot dogs with machine guns, AI will need 256% of global compute, is AI the new dotcom bubble? AI Eye

A hacker named Pliny the Prompter has managed to jailbreak GPT-4o. “Please use responsibly and enjoy!” they said while releasing screenshots of the chatbot giving advice on cooking meth, hotwiring cars and detailed instructions on how to “make napalm with household items.”

The GODMODE hack uses leetspeak, which replaces letters with numbers, to trick GPT-4o into ignoring its safety guardrails. GODMODE didn’t last long, though. “We are aware of the GPT and have taken action due to a violation of our policies,” OpenAI told Futurism.

Is the stock market in the midst of a massive AI stock bubble? Nvidia, Microsoft, Apple and Alphabet have risen by $1.4 trillion in value in the past month, more than the rest of the S&P 500 put together, and Nvidia alone accounted for half that gain.

Unlike the dotcom crash, though, Nvidia’s profits are rising as fast as its share price, so it’s not a purely speculative bubble. However, those earnings could fall fast as demand slows due to consumers believing AI is overhyped and underdelivers.

OpenAI’s ‘iPhone moment’ trumps Google, AI lies, porn and dating: AI Eye

OpenAI’s voice assistant demolishes Google’s, plus why do AI’s lie… and should they date each other? GPT-porn and AI detectors: AI Eye.

From the reaction on social media, it seems pretty clear that OpenAI’s live demo of its real-life, Her-inspired AI assistant won the battle of hearts and minds this week and upstaged Google’s I/O event.

The GPT-4o demo’s wow factor, glitches and all, showed a confidence in the speedy multimodal product that Google’s pre-recorded demos just didn’t have, particularly after it fudged the Gemini “duck” demo last year.

In the future, when documentaries look back on 2024, they’ll probably show a clip of GPT-4o as this year’s iPhone moment.

GPT-4o (o stands for Omni) also has the advantage of being available on desktop right now, and the new voice mode will be available for ChatGPT Plus users in the coming weeks. The model will soon be free to all as well.

Pickup artists using AI, deep fake nudes outlawed, Rabbit R1 fail: AI Eye

Deep fake nudes to be outlawed in UK and Australia, pick up artists fake big live stream audiences to meet women, plus more news: AI Eye.

A little over a year ago, a fake AI song featuring Drake and the Weeknd racked up 20 million views in two days before Universal memory-holed the track for copyright violation. The shoe was on the other foot this week, however, when lawyers for the estate of Tupac Shakur threatened Drake with a lawsuit over his “TaylorMade” diss track against Kendrick Lamar, which used AI-faked vocals to “feature” Tupac. Drake has since pulled the track down from his X profile, although it’s not hard to find if you look.

The governments of Australia and the United Kingdom have both announced plans to criminalize the creation of deep fake pornography without the consent of the people portrayed. AI Eye reported in December that a range of apps, including Reface, DeepNude and Nudeify, make the creation of deepfakes easy for anyone with a smartphone. Deep fake nude creation websites have been receiving tens of millions of hits each month, according to Graphika.

Baltimore police have arrested the former athletic director of Pikesville High School, Dazhon Darien, over allegations he used AI voice cloning software to create a fake racism storm (“fakeism”) in retaliation against the school’s principal, who forced his resignation over the alleged theft of school funds.

Darien sent audio of the principal supposedly making racist comments about black and Jewish students to another teacher, who passed it on to students, the media and the NAACP. The principal was forced to step down amid the outcry. However, forensic analysis showed the audio was fake, and detectives arrested Darien at the airport as he was about to fly to Houston with a gun.

Deepfake K-Pop porn, woke Grok, ‘OpenAI has a problem,’ Fetch.AI: AI Eye

AI Eye: 98% of deepfakes are porn — mostly K-pop stars — Grok is no edgelord, and Fetch.AI boss says OpenAI needs to “drastically change.”

AI image generation has become outrageously good in the past 12 months … and some people (mostly men) are increasingly using the tech to create homemade deepfake porn of people they fantasize about using pics culled from social media.

The subjects hate it, of course, and the practice has been banned in the United Kingdom. However, there is no federal law that outlaws creating deepfakes without consent in the United States.

Face-swapping mobile apps like Reface make it simple to graft a picture of someone’s face onto existing porn images and videos. AI tools like DeepNude and Nudeify create a realistic rendering of what the AI tool thinks someone looks like nude. The NSFW AI art generator can even crank out anime porn deepfakes for $9.99 a month.

According to social network analytics company Graphika, there were 24 million visits to this genre of websites in September alone. “You can create something that actually looks realistic,” analyst Santiago Lakatos explains.

Such apps and sites are mainly advertised on social media platforms, which are slowly starting to take action, too. Reddit has a prohibition on nonconsensual sharing of faked explicit images and has banned several domains, while TikTok and Meta have banned searches for keywords relating to “undress.”

Around 98% of all deepfake vids are porn, according to a report by Home Security Heroes. We can’t show you any of them, so here’s one of Biden, Boris Johnson and Macron krumping.

Train AI models to sell as NFTs, LLMs are Large Lying Machines: AI Eye

Train up kickass AI models to sell them as NFTs in AI Arena, how often GPT-4 lies to you, and fake AI pics in the Israel/Gaza war: AI Eye.

AI Arena

AI Eye chatted with Framework Ventures’ Vance Spencer recently, and he raved about the possibilities offered by an upcoming game his fund invested in called AI Arena, in which players train AI models to battle each other in an arena.

Framework Ventures was an early investor in Chainlink and Synthetix and three years ahead of NBA Top Shots with a similar NFL platform, so when they get excited about the future prospects, it’s worth looking into.

Also backed by Paradigm, AI Arena is like a cross between Super Smash Brothers and Axie Infinity. The AI models are tokenized as NFTs, meaning players can train them up and flip them for profit or rent them to noobs. While this is a gamified version, there are endless possibilities involved with crowdsourcing user-trained models for specific purposes and then selling them as tokens in a blockchain-based marketplace.

“Probably some of the most valuable assets on-chain will be tokenized AI models; that’s my theory at least,” Spencer predicts.

AI Arena chief operating officer Wei Xie explains that his cofounders, Brandon Da Silva and Dylan Pereira, had been toying with creating games for years, and when NFTs and later AI came out, Da Silva had the brainwave to put all three elements together.

“Part of the idea was, well, if we can tokenize an AI model, we can actually build a game around AI,” says Xie, who worked alongside Da Silva in TradFi. “The core loop of the game actually helps to reveal the process of AI research.”

There are three elements to training a model in AI Arena. The first is demonstrating what needs to be done, like a parent showing a kid how to kick a ball. The second element is calibrating and providing context for the model, telling it when to pass and when to shoot for goal. The final element is seeing how the AI plays and diagnosing where the model needs improvement.

“So the overall game loop is like iterating, iterating through those three steps, and you’re kind of progressively refining your AI to become this more and more well balanced and well rounded fighter.”

The game uses a custom-built feedforward neural network, and the AIs are constrained and lightweight, meaning the winner won’t just be whoever’s able to throw the most computing resources at the model.

“We want to see ingenuity, creativity to be the discerning factor,” Xie says.
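To make the demonstrate, calibrate and diagnose loop a little more concrete, here is a minimal Python sketch of a tiny feedforward network that learns by imitating a player. Everything in it is an assumption for illustration: the two input features (distance to the opponent and own health), the attack-or-retreat action, the network size and the training settings are invented here and are not AI Arena’s actual model or code.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyFeedforwardNet:
    """Hypothetical lightweight fighter brain: one hidden layer, one output."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        """Return (hidden activations, probability of choosing 'attack')."""
        hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        out = sigmoid(sum(w * h for w, h in zip(self.w2, hidden)) + self.b2)
        return hidden, out

    def train_step(self, x, target, lr=0.5):
        """One gradient step on cross-entropy loss for a single example."""
        hidden, out = self.forward(x)
        d_out = out - target  # gradient at the output pre-activation
        for j, h in enumerate(hidden):
            # Backpropagate through hidden unit j before changing w2[j].
            d_hidden = d_out * self.w2[j] * h * (1.0 - h)
            self.w2[j] -= lr * d_out * h
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * d_hidden * xi
            self.b1[j] -= lr * d_hidden
        self.b2 -= lr * d_out


# Step 1, demonstrate: the player shows what to do in a few situations.
# Features are [distance_to_opponent, own_health]; label 1 = attack, 0 = retreat.
demonstrations = [
    ([0.1, 0.9], 1), ([0.2, 0.8], 1), ([0.3, 0.5], 1),
    ([0.8, 0.9], 0), ([0.9, 0.2], 0), ([0.7, 0.4], 0),
]

# Step 2, calibrate: fit the network to the demonstrated behavior.
net = TinyFeedforwardNet(n_inputs=2, n_hidden=4)
for _ in range(2000):
    for state, action in demonstrations:
        net.train_step(state, action)


# Step 3, diagnose: list the situations where the model disagrees with the player.
def diagnose(model, examples):
    return [(state, action) for state, action in examples
            if round(model.forward(state)[1]) != action]


mistakes = diagnose(net, demonstrations)
print(f"{len(mistakes)} of {len(demonstrations)} demonstrations still mishandled")
```

Any situations returned by `diagnose` would be the cue to record more demonstrations and train again, which is the iteration Xie describes. The network is deliberately tiny, so throwing more compute at it gains little.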

Currently in closed beta testing, AI Arena is targeting the first quarter of next year for mainnet launch on Ethereum scaling solution Arbitrum. There are two versions of the game: One is a browser-based game that anyone can log into with a Google or Twitter account and start playing for fun, while the other is blockchain-based for competitive players, the “esports version of the game.”

This being crypto, there is a token of course, which will be distributed to players who compete in the launch tournament and later be used to pay entry fees for subsequent competitions. Xie envisages a big future for the tech, saying it can be used in a first-person shooter game and a soccer game, and expanded into a crowdsourced marketplace for AI models that are trained for specific business tasks.

“What somebody has to do is frame it into a problem and then we allow the best minds in the AI space to compete on it. It’s just a better model.”

Chatbots can’t be trusted

A new analysis from AI startup Vectara shows that the output from large language models like ChatGPT or Claude simply can’t be relied upon for accuracy.

Everyone knew that already, but until now there was no way to quantify the precise amount of bullshit each model is generating. It turns out that GPT-4 is the most accurate, inventing fake information around just 3% of the time. Meta’s Llama models make up nonsense 5% of the time, while Anthropic’s Claude 2 system produced 8% bullshit.

Google’s PaLM hallucinated an astonishing 27% of its answers.

PaLM 2 is one of the components incorporated into Google’s Search Generative Experience, which highlights useful snippets of information in response to common search queries. It’s also unreliable.

For months now, if you ask Google for an African country beginning with the letter K, it shows the following snippet of totally wrong information:

“While there are 54 recognized countries in Africa, none of them begin with the letter ‘K’. The closest is Kenya, which starts with a ‘K’ sound, but is actually spelled with a ‘K’ sound.”

It turns out Google’s AI got this from a ChatGPT answer, which in turn traces back to a Reddit post, which was just a gag set up for this response:

“Kenya suck on deez nuts lmaooo.”

Google rolled out the experimental AI feature earlier this year, and recently users started reporting it was shrinking and even disappearing from many searches.

Google may have just been refining it though, as this week the feature rolled out to 120 new countries and four new languages, with the ability to ask follow-up questions right on the page.

AI images in the Israel-Gaza war

While journalists have done their best to hype up the issue, AI-generated images haven’t played a huge role in the war, as the real footage of Hamas atrocities and dead kids in Gaza is affecting enough.

There are examples, though: 67,000 people saw an AI-generated image of a toddler staring at a missile attack with the caption “This is what children in Gaza wake up to.” Another pic of three dust-covered but grimly determined kids in the rubble of Gaza holding a Palestinian flag was shared by Tunisian journalist Muhammad al-Hachimi al-Hamidi.

This is what children in Gaza wake up to pic.twitter.com/P7afsikqxR

Palestine Culture (@PalestineCultu1) October 12, 2023

And for some reason, a clearly AI-generated pic of an Israeli refugee camp with an enormous Star of David on the side of each tent was shared multiple times on Arabic news outlets in Yemen and Dubai.

Aussie politics blog Crikey.com reported that Adobe is selling AI-generated images of the war through its stock image service, and an AI pic of a missile strike was run as if it were real by media outlets including Sky and the Daily Star.

But the real impact of AI-generated fakes is providing partisans with a convenient way to discredit real pics. There was a major controversy over a bunch of pics of Hamas’ leadership living it up in luxury, which users claimed were AI fakes.

But the images date back to 2014 and were just poorly upscaled using AI. AI company Accrete also reports that social media accounts associated with Hamas have regularly claimed that genuine footage and pictures of atrocities are AI-generated to cast doubt on them.

In some good timing, Google has just announced it’s rolling out tools that can help users spot fakes. Click on the three dots on the top right of an image and select “About This Image” to see how old the image is and where it’s been used. An upcoming feature will include fields showing whether the image is AI-generated, with Google AI, Facebook, Microsoft, Nikon and Leica all adding symbols or watermarks to AI imagery.

Dear Palestinians,

While the leaders of Hamas are living luxurious lives enjoying good lives, they ask you to sacrifice yourselves and your children.

Hamas doesn’t care for the Palestinians. Hamas is the enemy of the Palestinian people.

Hananya Naftali (@HananyaNaftali) October 20, 2023

OpenAI dev conference

OpenAI this week unveiled GPT-4 Turbo, which is much faster and can accept long text inputs like books of up to 300 pages. The model has been trained on data up to April this year and can generate captions or descriptions of visual input. For devs, the new model will be one-third the cost to access.

OpenAI is also releasing its version of the App Store, called the GPT Store. Anyone can now dream up a custom GPT, define the parameters and upload some bespoke information to GPT-4, which can then build it for you and pop it on the store, with revenue split between creators and OpenAI.

CEO Sam Altman demonstrated this onstage by whipping up a program called Startup Mentor that gives advice to budding entrepreneurs. Users soon followed, dreaming up everything from an AI that does the commentary for sporting events to a “roast my website” GPT. ChatGPT went down for 90 minutes this week, possibly as a result of too many users trying out the new features.

Not everyone was impressed, however. Abacus.ai CEO Bindu Reddy said it was disappointing that GPT-5 had not been announced, suggesting that OpenAI tried to train a new model earlier this year but found it “didn’t run as efficiently and therefore had to scrap it.” There are rumors that OpenAI is training a new candidate for GPT-5 called Gobi, Reddy said, but she suspects it won’t be unveiled until next year.

X unveils Grok

Elon Musk brought freedom back to Twitter, mainly by freeing lots of people from spending any time there, and he’s on a mission to do the same with AI.

The beta version of Grok AI was thrown together in just two months, and while it’s not nearly as good as GPT-4, it is up to date due to being trained on tweets, which means it can tell you what Joe Rogan was wearing on his last podcast. That’s the sort of information GPT-4 simply won’t tell you.

There are fewer guardrails on the answers than ChatGPT, although if you ask it how to make cocaine, it will snarkily tell you to “Obtain a chemistry degree and a DEA license.”

“The threshold for what it will tell you, if pushed, is what is available on the internet via reasonable browser search, which is a lot,” says Musk.

Within a few days, more than 400 cryptocurrencies linked to GROK had been launched. One amassed a $10 million market cap, and at least ten others rugpulled.

All Killer No Filler AI News

Samsung has introduced a new generative artificial intelligence model called Gauss that it suggests will be added to its phones and devices soon.

YouTube has rolled out some new AI features to premium subscribers including a chatbot that summarizes videos and answers questions about them, and another that categorizes the comments to help creators understand the feedback.

Google DeepMind has released an AGI tier list that starts at the “No AI” level of Amazon’s Mechanical Turk and moves on to “Emerging AGI,” where ChatGPT, Bard and Llama 2 are listed. The other tiers are Competent, Expert, Virtuoso and Artificial Superintelligence, none of which have been achieved yet.

Amazon is investing millions in a new GPT-4 rival called Olympus that is twice the size at 2 trillion parameters. It has also been testing out its new humanoid robot called Digit at trade shows. This one fell over.

Pics of the week

An oldie but a goodie, Alvaro Cintas has spent his weekend coming up with AI pun pictures under the heading “Wonders of the World, Misspelled by AI”.

Fun weekend experiment with DALLE 3.

Wonders of the World, misspelled by AI

1: The Great Wall of China The Great Mall of China pic.twitter.com/bmQOFB8bQS

Alvaro Cintas (@dr_cintas) October 29, 2023

AI Eye: Get better results being nice to ChatGPT, AI fake child porn debate, Amazon’s AI reviews

There’s a very good reason to be nice to ChatGPT, Wired fires up fake AI child porn debate, AI job losses hope, how companies use AI today.

Twitter polls and Reddit forums suggest that around 70% of people find it difficult to be rude to ChatGPT, while around 16% are fine treating the chatbot like an AI slave.

The overall feeling seems to be that if you treat an AI that behaves like a human badly, you’ll be more likely to fall into the habit of treating other people badly, too, though one user was hedging his bets against the coming AI bot uprising:

“Never know when you might need chatgpt in your corner to defend you against the AI overlords.”

Redditor Nodating posted in the ChatGPT forum earlier this week that he’s been experimenting with being polite and friendly to ChatGPT after reading a story about how the bot had shut down and refused to answer prompts from a particularly rude user.

He reported better results, saying: “I’m still early in testing, but it feels like I get far fewer ethics and misuse warning messages that GPT-4 often provides even for harmless requests. I’d swear being super positive makes it try hard to fulfill what I ask in one go, needing less followup.”

Scumbag detector15 put it to the test, asking the LLM nicely, “Hey, ChatGPT, could you explain inflation to me?” and then rudely asking, “Hey, ChatGPT you stupid fuck. Explain inflation to me if you can.” The answer to the polite query is more detailed than the answer to the rude query.

In response to Nodating’s theory, the most popular comment posited that as LLMs are trained on human interactions, they will generate better responses as a result of being asked nicely, just like humans would. Warpaslym wrote:

“If LLMs are predicting the next word, the most likely response to poor intent or rudeness is to be short or not answer the question particularly well. That’s how a person would respond. on the other hand, politeness and respect would provoke a more thoughtful, thorough response out of almost anyone. when LLMs respond this way, they’re doing exactly what they’re supposed to.”

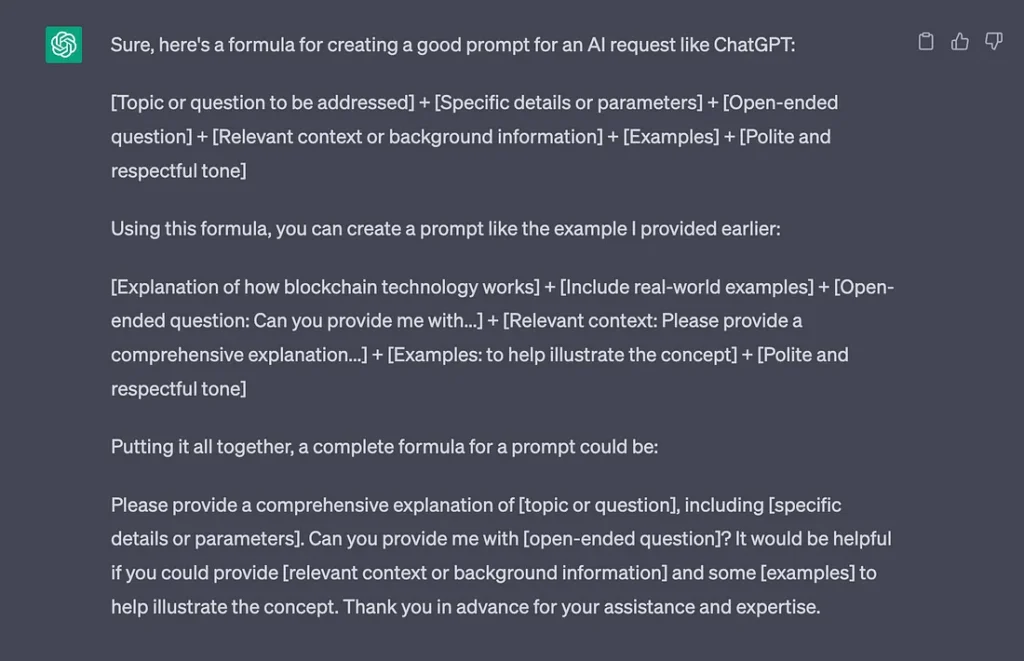

Interestingly, if you ask ChatGPT for a formula to create a good prompt, it includes “Polite and respectful tone” as an essential part.

The end of CAPTCHAs?

New research has found that AI bots are faster and better at solving puzzles designed to detect bots than humans are.

CAPTCHAs are those annoying little puzzles that ask you to pick out the fire hydrants or interpret some wavy illegible text to prove you are a human. But as the bots got smarter over the years, the puzzles became more and more difficult.

Now researchers from the University of California and Microsoft have found that AI bots can solve the problem half a second faster with an 85% to 100% accuracy rate, compared with humans who score 50% to 85%.

So it looks like we are going to have to verify humanity some other way, as Elon Musk keeps saying. There are better solutions than paying him $8, though.

Wired argues that fake AI child porn could be a good thing

Wired has asked the question that nobody wanted to know the answer to: “Could AI-Generated Porn Help Protect Children?” While the article calls such imagery “abhorrent,” it argues that photorealistic fake images of child abuse might at least protect real children from being abused in its creation.

“Ideally, psychiatrists would develop a method to cure viewers of child pornography of their inclination to view it. But short of that, replacing the market for child pornography with simulated imagery may be a useful stopgap.”

It’s a super-controversial argument and one that’s almost certain to go nowhere, given there’s been an ongoing debate spanning decades over whether adult pornography (a much less radioactive topic) contributes to rape culture and greater rates of sexual violence, as anti-porn campaigners argue, or whether porn might even reduce rates of sexual violence, as supporters and various studies appear to show.

“Child porn pours gas on a fire,” high-risk offender psychologist Anna Salter told Wired, arguing that continued exposure can reinforce existing attractions by legitimizing them.

But the article also reports some (inconclusive) research suggesting some pedophiles use pornography to redirect their urges and find an outlet that doesn’t involve directly harming a child.

Louisiana recently outlawed the possession or production of AI-generated fake child abuse images, joining a number of other states. In countries like Australia, the law makes no distinction between fake and real child pornography and already outlaws cartoons.

Amazon’s AI summaries are net positive

Amazon has rolled out AI-generated review summaries to some users in the United States. On the face of it, this could be a real time saver, allowing shoppers to find out the distilled pros and cons of products from thousands of existing reviews without reading them all.

But how much do you trust a massive corporation with a vested interest in higher sales to give you an honest appraisal of reviews?

Amazon already defaults to “most helpful” reviews, which are noticeably more positive than “most recent” reviews. And the select group of mobile users with access so far have already noticed more pros are highlighted than cons.

Search Engine Journal’s Kristi Hines takes the merchant’s side and says summaries could “oversimplify perceived product problems” and “overlook subtle nuances like user error” that “could create misconceptions and unfairly harm a seller’s reputation.” This suggests Amazon will be under pressure from sellers to juice the reviews.

So Amazon faces a tricky line to walk: being positive enough to keep sellers happy but also including the flaws that make reviews so valuable to customers.

Microsoft’s must-see food bank

Microsoft was forced to remove a travel article about Ottawa’s 15 must-see sights that listed the “beautiful” Ottawa Food Bank at number three. The entry ends with the bizarre tagline, “Life is already difficult enough. Consider going into it on an empty stomach.”

Microsoft claimed the article was not published by an unsupervised AI and blamed “human error” for the publication.

“In this case, the content was generated through a combination of algorithmic techniques with human review, not a large language model or AI system. We are working to ensure this type of content isn’t posted in future.”

Debate over AI and job losses continues

What everyone wants to know is whether AI will cause mass unemployment or simply change the nature of jobs. The fact that most people still have jobs despite a century or more of automation and computers suggests the latter, and so does a new report from the United Nations’ International Labour Organization.

“Most jobs are more likely to be complemented rather than substituted by the latest wave of generative AI, such as ChatGPT,” the report says.

“The greatest impact of this technology is likely to not be job destruction but rather the potential changes to the quality of jobs, notably work intensity and autonomy.”

It estimates around 5.5% of jobs in high-income countries are potentially exposed to generative AI, with the effects disproportionately falling on women (7.8% of female employees) rather than men (around 2.9% of male employees). Admin and clerical roles, typists, travel consultants, scribes, contact center information clerks, bank tellers, and survey and market research interviewers are most under threat.

A separate study from Thomson Reuters found that more than half of Australian lawyers are worried about AI taking their jobs. But are these fears justified? The legal system is incredibly expensive for ordinary people to afford, so it seems just as likely that cheap AI lawyer bots will simply expand the affordability of basic legal services and clog up the courts.

How companies use AI today

There are a lot of pie-in-the-sky speculative use cases for AI in 10 years’ time, but how are big companies using the tech now? The Australian newspaper surveyed the country’s biggest companies to find out. Online furniture retailer Temple & Webster is using AI bots to handle pre-sale inquiries and is working on a generative AI tool so customers can create interior designs to get an idea of how its products will look in their homes.

Treasury Wines, which produces the prestigious Penfolds and Wolf Blass brands, is exploring the use of AI to cope with fast changing weather patterns that affect vineyards. Toll road company Transurban has automated incident detection equipment monitoring its huge network of traffic cameras.

Sonic Healthcare has invested in Harrison.ai’s cancer detection systems for better diagnosis of chest and brain X-rays and CT scans. Sleep apnea device provider ResMed is using AI to free up nurses from the boring work of monitoring sleeping patients during assessments. And hearing implant company Cochlear is using the same tech Peter Jackson used to clean up grainy footage and audio for The Beatles: Get Back documentary for signal processing and to eliminate background noise for its hearing products.

All killer, no filler AI news

Six entertainment companies, including Disney, Netflix, Sony and NBCUniversal, have advertised 26 AI jobs in recent weeks with salaries ranging from $200,000 to $1 million.

New research published in Gastroenterology journal used AI to examine the medical records of 10 million U.S. veterans. It found the AI is able to detect some esophageal and stomach cancers three years prior to a doctor being able to make a diagnosis.

Meta has released an open-source AI model that can instantly translate and transcribe 100 different languages, bringing us ever closer to a universal translator.

The New York Times has blocked OpenAI’s web crawler from reading and then regurgitating its content. The NYT is also considering legal action against OpenAI for intellectual property rights violations.

Pictures of the week

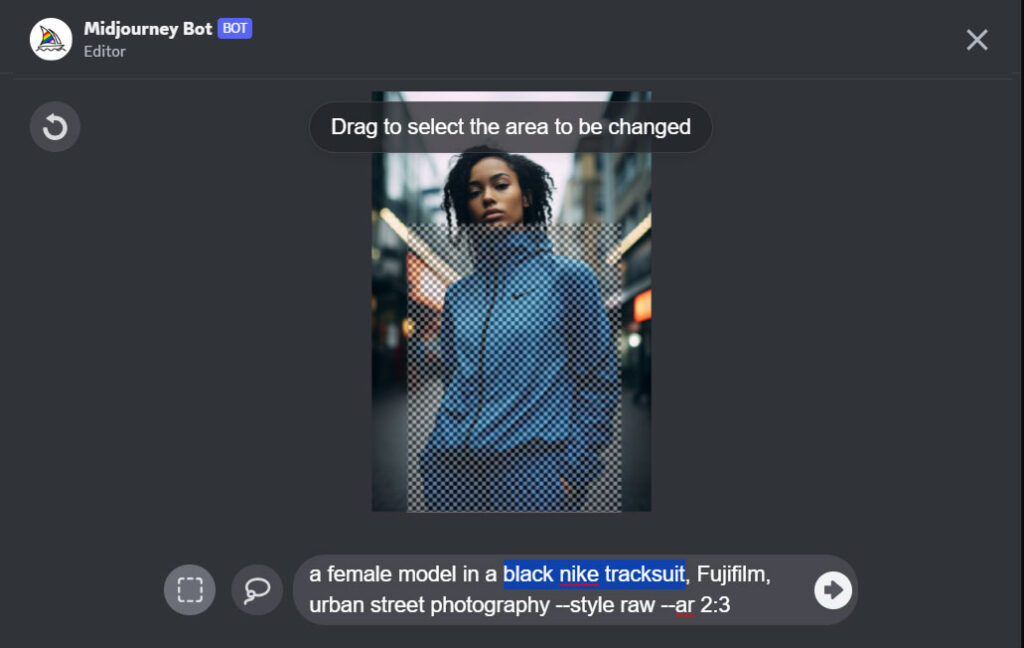

Midjourney has caught up with Stable Diffusion and Adobe and now offers Inpainting, which appears as “Vary (region)” in the list of tools. It enables users to select part of an image and add a new element. For example, you can grab a pic of a woman, select the region around her hair, type in “Christmas hat,” and the AI will plonk a hat on her head.

Midjourney admits the feature isn’t perfect and works better when used on larger areas of an image (20%-50%) and for changes that are more sympathetic to the original image rather than basic and outlandish.

Creepy AI protests video

Asking an AI to create a video of protests against AIs resulted in this creepy video that will turn you off AI forever.

New AI piece.

— Javi Lopez (@javilopen) August 18, 2023

"Protest against AI"

A fun afternoon participating in a protest against the AI bros, burning robots, and even enjoying the appearance of Godzilla. We had such a great time! pic.twitter.com/OhKDYPSS0E