There’s a very good reason to be nice to ChatGPT, Wired fires up fake AI child porn debate, AI job losses hope, how companies use AI today.

Twitter polls and Reddit forums suggest that around 70% of people find it difficult to be rude to ChatGPT, while around 16% are fine treating the chatbot like an AI slave.

The overall feeling seems to be that if you treat an AI that behaves like a human badly, you’ll be more likely to fall into the habit of treating other people badly, too, though one user was hedging his bets against the coming AI bot uprising:

“Never know when you might need chatgpt in your corner to defend you against the AI overlords.”

Redditor Nodating posted in the ChatGPT forum earlier this week that he’s been experimenting with being polite and friendly to ChatGPT after reading a story about how the bot had shut down and refused to answer prompts from a particularly rude user.

He reported better results, saying: “I’m still early in testing, but it feels like I get far fewer ethics and misuse warning messages that GPT-4 often provides even for harmless requests. I’d swear being super positive makes it try hard to fulfill what I ask in one go, needing less followup.”

Scumbag detector15 put it to the test, asking the LLM nicely, “Hey, ChatGPT, could you explain inflation to me?” and then rudely asking, “Hey, ChatGPT you stupid fuck. Explain inflation to me if you can.” The answer to the polite query is more detailed than the answer to the rude query.

In response to Nodating’s theory, the most popular comment posited that as LLMs are trained on human interactions, they will generate better responses as a result of being asked nicely, just like humans would. Warpaslym wrote:

“If LLMs are predicting the next word, the most likely response to poor intent or rudeness is to be short or not answer the question particularly well. That’s how a person would respond. on the other hand, politeness and respect would provoke a more thoughtful, thorough response out of almost anyone. when LLMs respond this way, they’re doing exactly what they’re supposed to.”

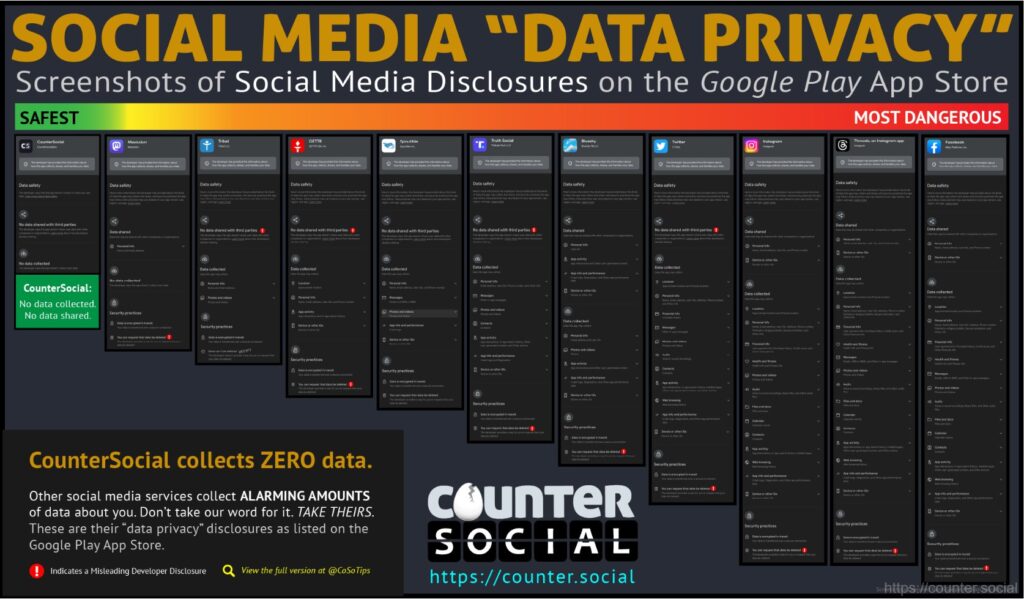

Interestingly, if you ask ChatGPT for a formula to create a good prompt, it includes “Polite and respectful tone” as an essential part.

The end of CAPTCHAs?

New research has found that AI bots are faster and better at solving puzzles designed to detect bots than humans are.

CAPTCHAs are those annoying little puzzles that ask you to pick out the fire hydrants or interpret some wavy illegible text to prove you are a human. But as the bots got smarter over the years, the puzzles became more and more difficult.

Now researchers from the University of California and Microsoft have found that AI bots can solve the problem half a second faster with an 85% to 100% accuracy rate, compared with humans who score 50% to 85%.

So it looks like we are going to have to verify humanity some other way, as Elon Musk keeps saying. There are better solutions than paying him $8, though.

Wired argues that fake AI child porn could be a good thing

Wired has asked the question that nobody wanted to know the answer to: “Could AI-Generated Porn Help Protect Children?” While the article calls such imagery “abhorrent,” it argues that photorealistic fake images of child abuse might at least protect real children from being abused in its creation.

“Ideally, psychiatrists would develop a method to cure viewers of child pornography of their inclination to view it. But short of that, replacing the market for child pornography with simulated imagery may be a useful stopgap.”

It’s a super-controversial argument and one that’s almost certain to go nowhere, given there’s been an ongoing debate spanning decades over whether adult pornography (which is a much less radioactive topic) contributes to rape culture and greater rates of sexual violence, as anti-porn campaigners argue, or whether porn might even reduce rates of sexual violence, as supporters and various studies appear to show.

“Child porn pours gas on a fire,” high-risk offender psychologist Anna Salter told Wired, arguing that continued exposure can reinforce existing attractions by legitimizing them.

But the article also reports some (inconclusive) research suggesting some pedophiles use pornography to redirect their urges and find an outlet that doesn’t involve directly harming a child.

Louisiana recently outlawed the possession or production of AI-generated fake child abuse images, joining a number of other states. In countries like Australia, the law makes no distinction between fake and real child pornography and already outlaws cartoons.

Amazon’s AI summaries are net positive

Amazon has rolled out AI-generated review summaries to some users in the United States. On the face of it, this could be a real time saver, allowing shoppers to find out the distilled pros and cons of products from thousands of existing reviews without reading them all.

But how much do you trust a massive corporation with a vested interest in higher sales to give you an honest appraisal of reviews?

Amazon already defaults to ‘most helpful’ reviews, which are noticeably more positive than ‘most recent’ reviews. And the select group of mobile users with access so far have already noticed more pros are highlighted than cons.

Search Engine Journal’s Kristi Hines takes the merchants’ side and says summaries could “oversimplify perceived product problems” and “overlook subtle nuances like user error” that “could create misconceptions and unfairly harm a seller’s reputation.” This suggests Amazon will be under pressure from sellers to juice the reviews.

So Amazon faces a tricky line to walk: being positive enough to keep sellers happy but also including the flaws that make reviews so valuable to customers.

Microsoft’s must-see food bank

Microsoft was forced to remove a travel article about Ottawa’s 15 must-see sights that listed the “beautiful” Ottawa Food Bank at number three. The entry ends with the bizarre tagline, “Life is already difficult enough. Consider going into it on an empty stomach.”

Microsoft claimed the article was not published by an unsupervised AI and blamed “human error” for the publication.

“In this case, the content was generated through a combination of algorithmic techniques with human review, not a large language model or AI system. We are working to ensure this type of content isn’t posted in future.”

Debate over AI and job losses continues

What everyone wants to know is whether AI will cause mass unemployment or simply change the nature of jobs. The fact that most people still have jobs despite a century or more of automation and computers suggests the latter, and so does a new report from the United Nations’ International Labour Organization.

Most jobs are more likely to be complemented rather than substituted by the latest wave of generative AI, such as ChatGPT, the report says.

“The greatest impact of this technology is likely to not be job destruction but rather the potential changes to the quality of jobs, notably work intensity and autonomy.”

It estimates around 5.5% of jobs in high-income countries are potentially exposed to generative AI, with the effects disproportionately falling on women (7.8% of female employees) rather than men (around 2.9% of male employees). Admin and clerical roles, typists, travel consultants, scribes, contact center information clerks, bank tellers, and survey and market research interviewers are most under threat.

A separate study from Thomson Reuters found that more than half of Australian lawyers are worried about AI taking their jobs. But are these fears justified? The legal system is incredibly expensive for ordinary people, so it seems just as likely that cheap AI lawyer bots will simply make basic legal services more affordable and clog up the courts.

How companies use AI today

There are a lot of pie-in-the-sky speculative use cases for AI in 10 years’ time, but how are big companies using the tech now? The Australian newspaper surveyed the country’s biggest companies to find out. Online furniture retailer Temple & Webster is using AI bots to handle pre-sale inquiries and is working on a generative AI tool so customers can create interior designs to get an idea of how its products will look in their homes.

Treasury Wines, which produces the prestigious Penfolds and Wolf Blass brands, is exploring the use of AI to cope with fast-changing weather patterns that affect vineyards. Toll road company Transurban has automated incident detection equipment monitoring its huge network of traffic cameras.

Sonic Healthcare has invested in Harrison.ai’s cancer detection systems for better diagnosis of chest and brain X-rays and CT scans. Sleep apnea device provider ResMed is using AI to free up nurses from the boring work of monitoring sleeping patients during assessments. And hearing implant company Cochlear is using the same tech Peter Jackson used to clean up grainy footage and audio for The Beatles: Get Back documentary for signal processing and to eliminate background noise for its hearing products.

All killer, no filler AI news

Six entertainment companies, including Disney, Netflix, Sony and NBCUniversal, have advertised 26 AI jobs in recent weeks with salaries ranging from $200,000 to $1 million.

New research published in Gastroenterology journal used AI to examine the medical records of 10 million U.S. veterans. It found the AI is able to detect some esophageal and stomach cancers three years prior to a doctor being able to make a diagnosis.

Meta has released an open-source AI model that can instantly translate and transcribe 100 different languages, bringing us ever closer to a universal translator.

The New York Times has blocked OpenAI’s web crawler from reading and then regurgitating its content. The NYT is also considering legal action against OpenAI for intellectual property rights violations.

Pictures of the week

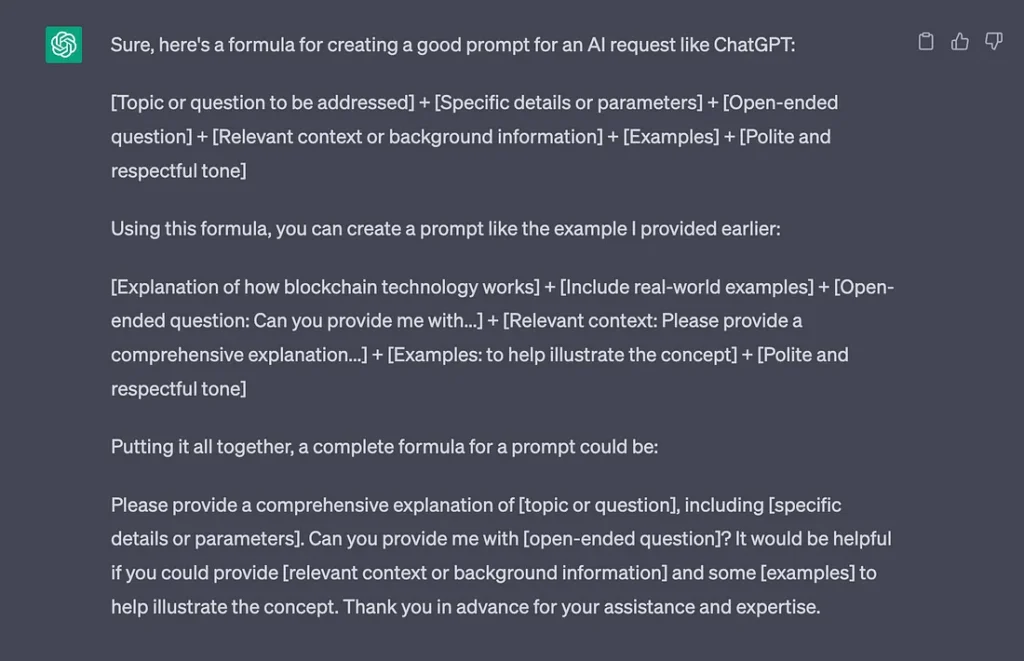

Midjourney has caught up with Stable Diffusion and Adobe and now offers Inpainting, which appears as “Vary (region)” in the list of tools. It enables users to select part of an image and add a new element so, for example, you can grab a pic of a woman, select the region around her hair, type in “Christmas hat,” and the AI will plonk a hat on her head.

Midjourney admits the feature isn’t perfect and works better when used on larger areas of an image (20%-50%) and for changes that are more sympathetic to the original image rather than basic and outlandish.

Creepy AI protests video

Asking an AI to create a video of protests against AIs resulted in this creepy video that will turn you off AI forever.

New AI piece.

"Protest against AI"

A fun afternoon participating in a protest against the AI bros, burning robots, and even enjoying the appearance of Godzilla. We had such a great time! pic.twitter.com/OhKDYPSS0E

Javi Lopez (@javilopen), August 18, 2023