By Michael Li, Vice President, Data at Coinbase

Hackathons have been a long-standing and important part of Coinbase culture, as they give our engineering teams the opportunity to collaborate with one another and experiment directly with the tools that are enabling a new era of open finance.

At Coinbase, we recognize that Web3 unlocks a whole new realm of possibilities for developers, most of which have yet to be explored. To pursue these possibilities confidently, engineers need a baseline knowledge of the ecosystem, the Web3 stack, and key smart contract concepts across different blockchain protocols.

This year, we used the time set aside for the annual Coinbase hackathon to kick off Smart Contract Hack Days, giving all of our engineers a crash course in Web3 development for real-world applications.

Time to BUIDL

In December, we assigned all participants to project pods of 5–10 people, each led by an experienced Coinbase team member who had been through in-depth blockchain engineering training. Following a day-long crash course in Solidity (developer tools and workflow), each pod had 48 hours to build a demo that would be judged on product, engineering, and design.

Participating pods had a chance to win one of eight awards and crypto-forward prizes. Award categories included People’s Choice (selected by the entire audience), Learning Showcase (the pod that demonstrated the most learning through working on their project), Judges’ Choice (overall judge favorites), Best Executed (judged on quality, teamwork, and overall execution), and Most Creative (the most exciting and creative take on the hack day guidelines).

Hack Day Showstoppers

Among the 44 pods that presented on Smart Contract Hack Demo Day, the top five categories of project submissions were Web3 infrastructure, gaming, DAOs, NFTs, and event ticketing.

Many strong ideas were presented. Some of the highlights, along with their taglines, include:

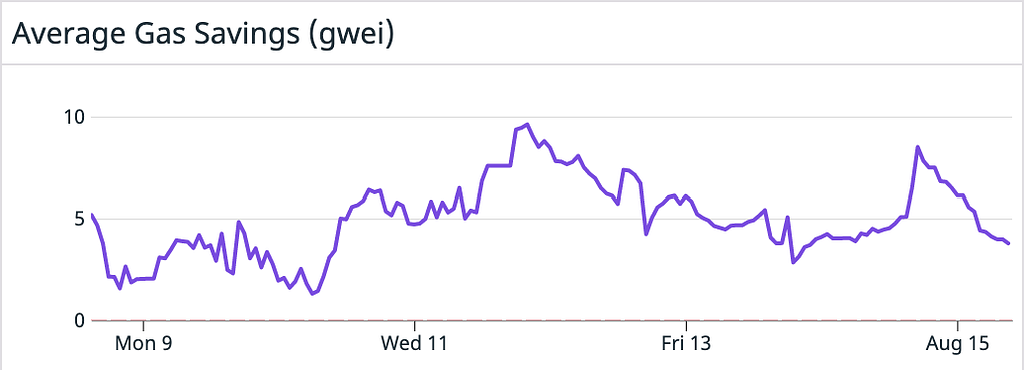

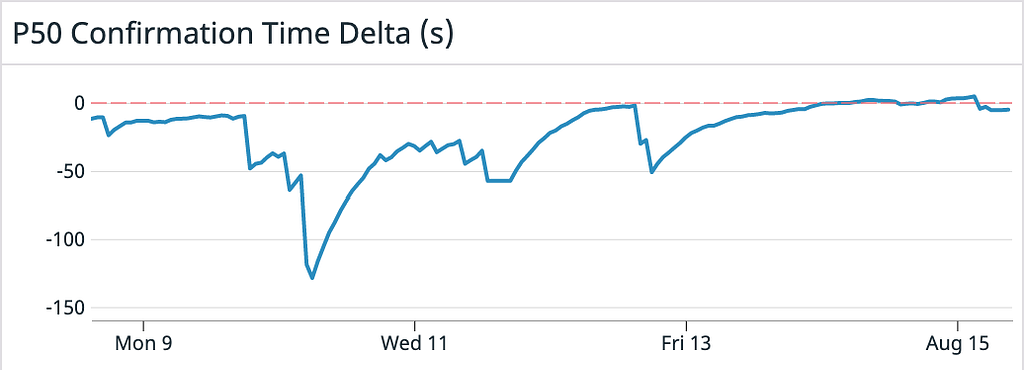

- (Gas)tly: Complete gas transactions with confidence

- Concert-AMWest-2: An NFT concert ticket and a marketplace contract that can transfer funds from the buyer to the marketplace and pay a royalty to the musician

- GenEd Labs: A charitable giving DAO that enables the ethical investor to leverage the blockchain to close the skill gap within underserved communities

- Real-Time Shibas: Capture the yield farms with your Shiba army

- BridgeIt: Pooling deposits together for cost-efficient bridging

- Not So Bored Apes: Decentralizing and revolutionizing the casino world one step at a time

What We’ve Learned After Experiencing a Day in the Life of a Web3 Dev

We were inspired by the number of creative product ideas presented during Hack Days, and we took away many important insights to apply to future training and hackathons.

Some of these insights include:

- Teams need more focus time to execute: many teams felt they lacked adequate time because of competing priorities from day-to-day work. We will improve by giving all participants fully dedicated time in the future.

- Participants would like more autonomy in team creation: team dynamics are important. Finding teammates who share a similar vision or vibe, or who have complementary work styles and perspectives, can go a long way, especially in high-pressure scenarios.

- Save room for ideation: participants may feel more engaged and motivated to take an idea over the finish line when they choose what to build rather than being assigned to a project.

What’s Next?

One of the most exciting things about Web3 is its potential. It is likely to touch every industry, whether as the infrastructure that underpins a wave of new products or as a tool for interacting with brands and businesses in a more trustless and equitable way. This year’s hackathon, in many ways, is a testament to the effort needed to onboard developers from the world of Web2 to Web3.

At Coinbase, we remain optimistic about the future of the industry and are committed to spearheading new initiatives that will allow our teams to continue learning, creating and building together.

We are always looking for top talent to join our ever-growing team and #LiveCrypto. Learn more about open positions on our website.

Highlights from Coinbase’s First Smart Contract Hack Days was originally published in The Coinbase Blog on Medium.