Phishing attacks are on the rise — here are some steps you can take to protect yourself

TL;DR: As we have discussed in previous blog posts, at Coinbase we believe that a healthy and safe crypto industry is critical to growing and maturing the cryptoeconomy. Part of that means sharing information that we believe will help protect consumers across the industry. It’s for this reason that we’re sharing the details of a recent large-scale Coinbase-branded phishing campaign that we believe impacted our customers and many others.

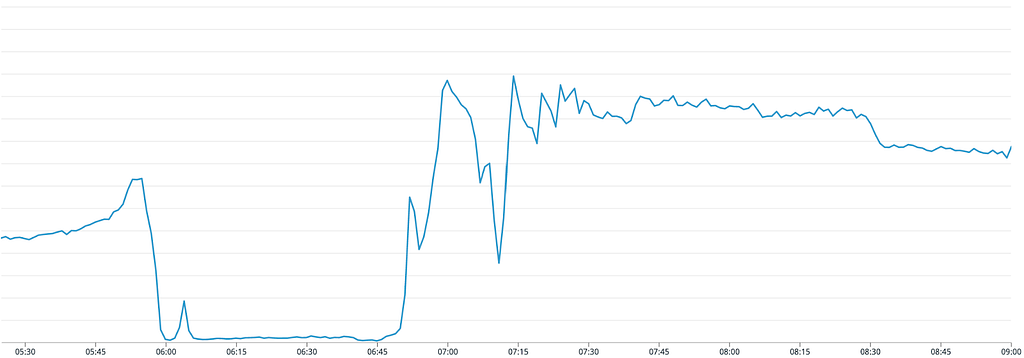

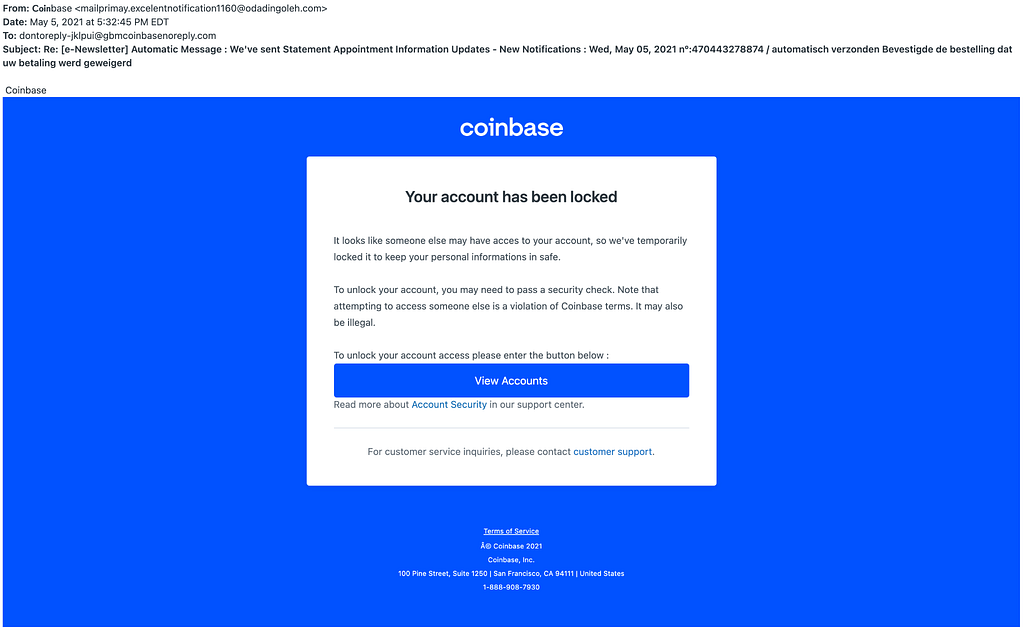

Between April and early May 2021, the Coinbase security team observed a significant uptick in Coinbase-branded phishing messages targeting users of a range of commonly used email service providers. (You can learn more about phishing in our Help Center.) Though the attack was broad, it was more successful at bypassing the spam filters of certain older email services.

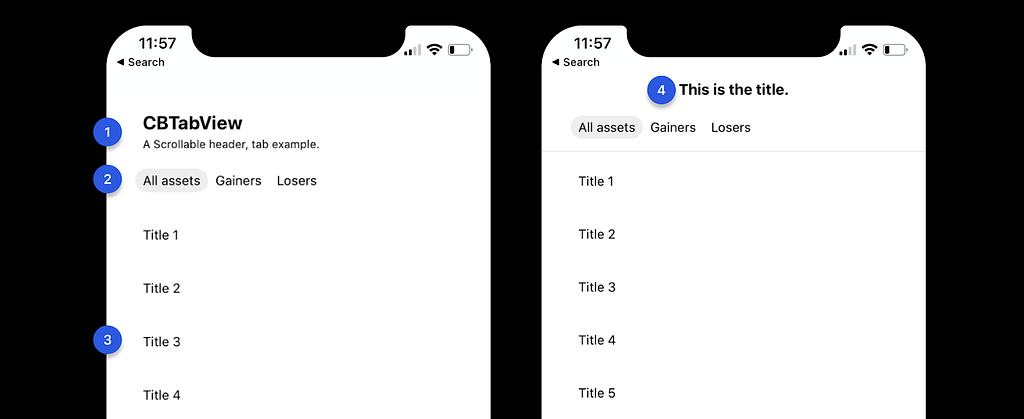

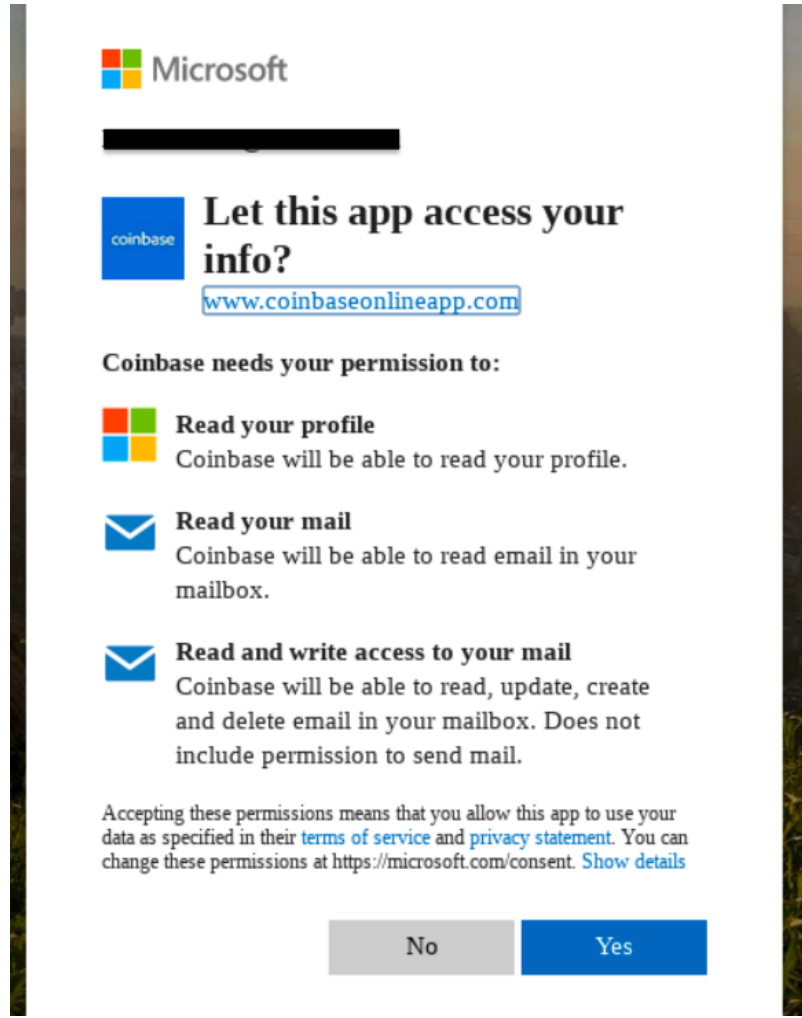

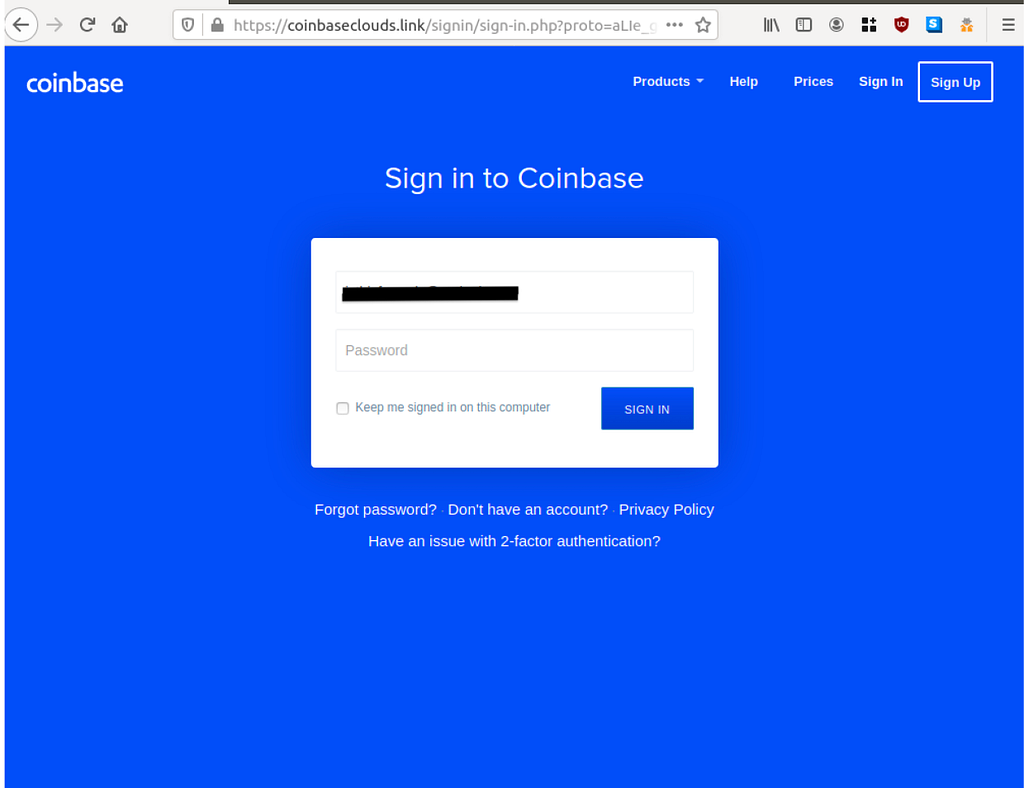

The messages used a wide variety of subject lines, senders, and content, and the attackers sometimes sent multiple variations to the same victims. The technique used to steal credentials also differed depending on the variant of email received. The screenshots in this post show a representative victim experience, though not every victim would have seen them in exactly this order.

Once the attackers had compromised a user’s email inbox and Coinbase credentials, in a small number of cases they were able to use that information to impersonate the user, gain access to the user’s Coinbase account, and bypass our SMS two-factor authentication system. With access to these accounts, the attackers were able to transfer funds to crypto wallets not associated with Coinbase. We have made changes to prevent this from happening in the future.

Once we learned of the attack, we took a number of steps to protect our customers, including working with external security partners to take down malicious domains and websites associated with the phishing campaign, as well as notifying the email service providers most impacted by the attack.

While attacks like this have the potential to cause significant harm to their victims, taking a few common-sense security precautions can dramatically reduce their efficacy. Below we lay out a few best practices that everyone should follow to keep their various online accounts safe.

Strong passwords

Passwords are the front door locks to your online applications, but far too many people fail when it comes to basic password best practices. In fact, according to a recent study, more than 50% of those polled reused passwords across multiple accounts. If a password is the front door lock to your digital life, reusing a password is like having the same key to your house, car, mailbox and place of work. In other words, reusing a password is barely better than having no password at all. Strong passwords should have a combination of letters (both upper and lower case), numbers and special characters, and be at least 10 characters long. Many password managers will suggest options that meet these standards.
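To make the guidelines above concrete, here is a minimal Python sketch of how a password generator might produce a password that meets them. It is an illustration only, not how any particular password manager works; the function names, the default length of 16, and the `SPECIALS` character set are assumptions made for this example.

```python
import secrets
import string

# Assumed set of special characters; sites differ on which symbols they accept.
SPECIALS = "!@#$%^&*()-_=+"
CLASSES = [string.ascii_lowercase, string.ascii_uppercase, string.digits, SPECIALS]


def generate_password(length: int = 16) -> str:
    """Return a random password containing at least one character per class."""
    if length < 10:
        raise ValueError("use at least 10 characters")
    # Guarantee one character from each class, then fill from the full alphabet.
    chars = [secrets.choice(cls) for cls in CLASSES]
    alphabet = "".join(CLASSES)
    chars += [secrets.choice(alphabet) for _ in range(length - len(chars))]
    # Shuffle with a cryptographically secure RNG so the guaranteed
    # characters do not always appear in the first four positions.
    secrets.SystemRandom().shuffle(chars)
    return "".join(chars)


def meets_guidelines(password: str) -> bool:
    """Check the length and character-class rules described in the text."""
    return len(password) >= 10 and all(
        any(ch in cls for ch in password) for cls in CLASSES
    )


if __name__ == "__main__":
    print(generate_password())
```

The sketch uses the `secrets` module rather than `random` because passwords should come from a cryptographically secure source of randomness. Note that `meets_guidelines` only checks structure; it cannot tell whether a password has been reused elsewhere.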

Use a password manager

If you’re following security best practices, you should be using a unique, strong password for every site you visit, which makes it practically impossible to remember the credentials for all of your online accounts. This is where password managers like 1Password and Dashlane become invaluable. Not only will a password manager suggest strong, randomly generated passwords for you, but many also have built-in features that help prevent you from entering your password on the wrong website, such as the fake sites used in phishing campaigns like the one described above.

Two-Factor Authentication

After choosing a strong, unique password, enabling two-factor authentication (2FA) is the most important way to secure your online accounts. 2FA, also known as 2-step verification, is a security layer in addition to your username and password. With 2FA enabled on your account, you will have to provide your password (first “factor”) and your 2FA code (second “factor”) when signing in to your account. There are many types of 2FA, ranging from a physical security key such as a YubiKey (the most secure) to SMS verification (the least secure). Many people choose SMS 2FA because it’s linked to a phone number rather than to one particular device, and it is generally the easiest to set up and use. Unfortunately, that same convenience also makes it easier for persistent attackers to intercept your 2FA codes. We strongly encourage everyone who currently uses SMS as a secondary authentication method to upgrade to a stronger method, like an authenticator app such as Google Authenticator or a security key, everywhere it is supported.
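For readers curious about why an authenticator app is harder to intercept than SMS, the sketch below shows how a time-based one-time password (TOTP) can be computed. Authenticator apps generally follow the TOTP standard (RFC 6238); this is a simplified illustration under that assumption, not the code of any specific app, and the function name and parameters are chosen for this example.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time code (RFC 6238 with HMAC-SHA1)."""
    now = time.time() if at is None else at
    # The moving factor is the number of `step`-second intervals since the epoch.
    counter = int(now // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte pick
    # a 4-byte window, and the top bit of that window is masked off.
    offset = mac[-1] & 0x0F
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # Shared secret from the RFC 6238 test vectors; a real secret is
    # random and is provisioned once, typically by scanning a QR code.
    print(totp(b"12345678901234567890", at=59))  # prints 287082
```

Because the code is derived on the device from a shared secret and the current time, nothing is sent over the phone network for an attacker to intercept. A code typed into a phishing page can still be relayed within its short validity window, which is one reason a physical security key remains the stronger option.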

Question everything

Coinbase, like most financial institutions or FinTech companies, will never contact you to ask for your password or two-factor authentication codes, or to ask you to take actions like installing new software or sending funds to a cryptocurrency address. Whenever you receive a communication from a company that you have dealings with, check that it is what you think it is. Was it sent from a domain that is consistent with the company (e.g., help@coinbase.com as opposed to support.coinbase@gmail.com)? Does the link lead to the company’s real website (e.g., paypal.com as opposed to secure-paypal[.]com)? Is the content in the communication or on the website in the same style and of the same quality as previous communications? Many scam sites will have typos, use old logos, or have a different feel than their genuine counterparts.
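The two domain checks above can be expressed as a short Python sketch. The helper names are hypothetical and the logic is deliberately simple: a host matches only if it is exactly the expected domain or a subdomain of it. Keep in mind that a sender address can be forged, so a passing check is a necessary sign of legitimacy, not proof of it.

```python
from urllib.parse import urlsplit


def host_matches(host: str, expected: str) -> bool:
    """True if host is the expected domain or one of its subdomains."""
    host = host.lower().rstrip(".")
    expected = expected.lower()
    return host == expected or host.endswith("." + expected)


def sender_is_from(address: str, expected: str) -> bool:
    """Check the part of an email address after the last '@'."""
    _, at, domain = address.rpartition("@")
    return at == "@" and host_matches(domain, expected)


def link_points_to(url: str, expected: str) -> bool:
    """Check the hostname of a URL, ignoring path and query string."""
    host = urlsplit(url).hostname
    return host is not None and host_matches(host, expected)


if __name__ == "__main__":
    print(sender_is_from("help@coinbase.com", "coinbase.com"))           # True
    print(sender_is_from("support.coinbase@gmail.com", "coinbase.com"))  # False
    print(link_points_to("https://www.paypal.com/signin", "paypal.com"))     # True
    print(link_points_to("https://secure-paypal.com/login", "paypal.com"))   # False
```

Matching on a leading dot is what rejects lookalikes: `secure-paypal.com` merely contains the text `paypal.com`, while `www.paypal.com` is a true subdomain. The same rule rejects hosts that place the brand name first, such as `paypal.com.evil.example`.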

Coinbase provides a number of resources to help our customers avoid online scams and report potentially malicious activity. Cryptocurrency transactions are irreversible. If you (or a hacker who has accessed your account illegitimately) send cryptocurrency to a third party, the transaction cannot be reversed or stopped. When you send cryptocurrency to a blockchain address, you must be certain of the legitimacy of any third-party services and merchants involved, and only send cryptocurrency to entities you trust. If you’re ever in doubt, do some research, and never send funds or share information unless you are 100% certain that the party you are interacting with is who you think it is.

Originally published in The Coinbase Blog on Medium.